Feeding Food

This is a draft (completed in October 2020) of a piece that was never published. I'm posting it here for anyone who might be interested. The food system is a massive and important topic, and here I've only scratched the surface of one small part of it. Researching and writing this shifted my thinking on many topics—I hope others will also get something from it.

Limit Break

Nowhere in the world do we see limitless growth. In agriculture, as in the broader natural world, there are nutritional limits that prevent plants and the life that depends on them from growing unbounded. Two limiting nutrients of special importance are nitrogen and phosphorus. Together these form a bottleneck for agricultural systems, with implications not only for food and other agricultural products but also for key features of a post-carbon world, such as bio-based alternatives to fossil fuels and their derivatives and afforestation.

Nitrogen is everywhere, making almost 80% of the air that surrounds us, which is why at first glance its role as a limiter might seem strange. Unlike carbon dioxide, the nitrogen in the air is inaccessible to plants. There are a variety of ways it can be made available to plants, such as through certain "nitrogen-fixing" bacteria or, much less commonly, lightning strikes, but something has to convert nitrogen into its inorganic form (e.g. ammonia) for plants to make use of it5354.

Phosphorus, more commonly a limiting factor in tropical areas55, is not as abundant as nitrogen, circulating through the gradual weathering of rocks.

Though many cultures found ways to better conserve soil nutrients and modestly supplement the nutritional content of soils through manures or legumes (which host nitrogen-fixing bacteria)56, it wasn't until the 19th century that a series of shifts occurred that effectively broke through these limits: the development of global fertilizer supply chains and the Haber-Bosch process for synthesizing nitrogen fertilizer at scale, named for Fritz Haber (sometimes called "the father of chemical warfare") who developed the process and Carl Bosch who later improved it. This process is still the basis for essentially all synthetic fertilizer production today. Nowadays the amount of reactive nitrogen and phosphorus circulating is more than double that of the pre-industrial world57585960.

These developments proliferated over the past century—use rates of nitrogen fertilizer increased over 40 times in the US over this period61—massively increasing global agricultural productivity, tripling agricultural value since 1970 to $2.6 trillion62. In the US synthetic fertilizer may be responsible for anywhere from 30 to 60% of yields, and even higher in the tropics6364. One of the most important consequences of the surpassing of these limits has been exponential population growth. Some 40% to half of the world population may owe their existence to the Haber-Bosch process54.

This massive nutritional increase comes at a substantial social and environmental cost and, at least in its current form, is unsustainable. The breaking of these nutrient limits is a major contributor to the breaking of planetary boundaries65: a recent paper estimated that almost 50% of global food production violates planetary boundaries, with almost of half to that (25% overall) due nitrogen fertilizer17.

Nitrogen fertilizers are typically applied in excess59; for cereal grain production only about a third of applied nitrogen is actually taken up the crop, amounting to \$90 billion of wasted nitrogen66, with some estimates ranging from half54 to 80%5767 being wasted across all crops. Similarly, estimates suggest only 15 to 30% of phosphorus is taken up686968. But a lot of this excess fertilizer leaches into water systems5465707172, causing eutrophication that has contributed to over 400 marine dead zones69. In the Gulf of Mexico such pollution from US agriculture costs the economy an estimated $1.4 billion annually by undermining fishing, recreation, and other marine activity7173. This overflow also contributes to the flourishing of pathogens like the West Nile virus74. Phosphorus fertilizers also contribute to heavy metal content in agriculture69, as much as 60% of cadmium in crops and soil757668, some of which also makes it to water sources76. In the US, agriculture is responsible for 70% of the total nitrogen and phosphorus pollution67.

This water pollution also has direct effects on human health, rendering drinking water toxic6961. Nitrate contamination is linked to many different diseases—blue baby syndrome, some cancers, and more. Violations of EPA limits of nitrate content in drinking water doubled from 650 in 1998 to 1,200 in 2008, while 2 million private wells are above the EPA-recommended standard74. This pollution is expensive to treat and the cost is usually borne by the public. The cost of a treatment facility can be hundreds of millions of dollars; the estimated cost for agriculture's share of the pollution is around \$1.7 billion annually74. The total cost of the environmental and health harm related to agricultural nitrogen in the US has been estimated at $157 billion per year74.

Nitrogen fertilizer overapplication also contributes to agricultural greenhouse gas emissions, especially N2O706654 which is now the main contributor to ozone depletion61. The amount of these emissions is estimated to increase exponentially with increased fertilizer usage77.

Other concerns regarding over-application—some also with any application of synthetic fertilizers—is their impact on soil health54, such as soil acidification2871 and net losses in soil nitrogen over time (meaning synthetic fertilizers' effectiveness decreases over time)6678. While disputed, some argue that synthetic fertilizer also inhibits soil's ability to sequester carbon64787980. What is more accepted is that more traditional techniques of maintaining soil fertility, which are often abandoned with the introduction of synthetic fertilizer28, improve carbon sequestration8182.

Of course, because the issue here is over-application—for example, China can use 30-50% less fertilizer and maintain the same yields and corn farmers in Minnesota reduced nitrogen use by 21% without a decrease in yield71—then the solution should be straightforward: use less of it. Recommendations to avoid fertilizer overapplication are generally about better management, more context-specific application (e.g. depending on what's already available in the soil and what the crop requires, carefully measuring out how much to apply)6028408371168485575474. This approach has been successful—in Denmark, for example, nitrogen fertilizer application decreased 52% since 1985 following the Nitrate Directive in 1987 which prescribes specific nitrogen management practices71. Similarly, a simple one fertilizer management program based on color charts successfully reduced fertilizer N use by about 25%57. The US has also seen improved nitrogen fertilizer efficiency through education initiatives59, but stronger regulation regarding synthetic fertilizer application is typically met with fierce resistance from farm lobbying groups61.

Other recommendations look a bit more deeply into why farmers over-apply fertilizer to begin with, especially around risk and incentives. Crop insurance appears to have some effect on fertilizer application rates, though there isn't consensus around their types and strength. One position argues that crop insurance programs encourage the planting of more input-intensive crops because they are better covered by the insurance, thus increasing fertilizer application; another argues that fertilizer over-application is a risk mitigation strategy (better to over-apply than under-apply and have a small harvest) that becomes redundant with crop insurance347274837186. Another position argues that crop insurance encourages agricultural expansion onto lands that are otherwise ill-suited for it—with greater environmental consequences—because crop insurance mitigates the risk of poor yields41. Some empirical studies have found that enrollment in crop insurance programs does increase the use of fertilizer3487 and water88 and does influence land use and crop choice (e.g. towards more nutrient-demanding crops)9, but others have found no effect or the opposite effect on fertilizer use8872.

In the case of the USDA, applications for assistance require that the applicant demonstrate genuine attempts to increase yields, which generally means applying fertilizers and adopting other conventional agriculture techniques. Such requirements are implemented out of concerns of gaming the system89. Because crop insurance is subsidized in the US, taxpayers essentially finance the consequent environmental destruction, while also suffering the consequences of water pollution, depletion of fish stocks, and more7489.

There are, however, environmental concerns beyond over-application, especially around the production of both nitrogen and phosphorus fertilizers.

Nitrogen fertilizer production—i.e. the Haber-Bosch process—is a large source of agriculture's environmental footprint82. The process requires a hydrocarbon source, usually natural gas, which is combined with nitrogen from the air90919216 and is also used to provide the required heat and energy. The natural gas is supposed to be used up by the process, such that methane emissions are minimal—but the actual emissions have been found to be over 145 times higher than reported93.

The production process also emits significant amounts of CO293; in 2011, this accounted for 25 million tons of greenhouse gases in the US, some 14% of the emissions for the entire chemical industry92. A huge chunk of agricultural emissions are actually attributable to fertilizer production due to these CO2 emissions - by one estimate, 30-33%92.

There are other problems from fertilizer production, such as pollution contributing to an estimated 4,300 premature deaths per year61 and substantial energy demands, accounting for an estimated third of total energy used in crop production16.

Phosphorus fertilizers have their own problems. The extraction and processing of the phosphate rock from which the fertilizer is produced come with the typical environmental issues of mining: water and air pollution, land degradation, erosion, toxic and occasionally radioactive byproducts (e.g. phosphogypsum)94952332966968. However, compared to the nitrogen fertilizer production process, phosphorus fertilizer requires a relatively modest amount of energy (about five times less than nitrogen fertilizer)16. Like nitrogen fertilizer, however, the process is dependent on the fossil fuel industry for inputs, namely sulfuric acid supplied as a byproduct from the oil and gas industry58. Both nitrogen and phosphorus fertilizer productions keep agriculture tightly linked to the fossil fuel industries we need to move beyond.

With phosphorus fertilizers one impending issue is phosphate rock's finite availability. No equivalent to the Haber-Bosch process exists for phosphorus fertilizer42, so it will continue to rely mostly on mined phosphate rock—about 96% of mined phosphate rock goes to fertilizer7010 and this accounts for about 60% of phosphorus applied to agriculture (the rest is from recycled sources like manure)1068.

As with other non-renewable resources, there are concerns around "peak phosphorus"451068. This is an inevitability given that it is a finite resource, but of course when this will occur is difficult to predict as anticipating actual reserves of any mineral is hard and estimates change frequently and by large amounts and according to economic and technological circumstances97451058. Estimates range from within a couple decades to hundreds of years684510.

At present the US is a major producer of phosphate rock, primarily in Florida, but that supply is expected to be 60% depleted by 2030 and completely gone mid-century45. China, another major producer, is expected to deplete in the near future as well10.

The Metabolic Rift

All of these issues of the present pour out of a deeper history of fertilizer that represents a more fundamental shift in our relationship with nature. This "metabolic rift" is the sundering of agriculture from its local ecological context98, and has at its core exploitation, colonialism, and imperialism2399100. The siphoning of fertility from the global periphery into Europe and its colonial descendants was foundational to pushing agricultural productivity beyond the limits of what the land could sustain.

Fertilizer itself was a common practice to maintain soil fertility while keeping it in production99 but it took the form of night soil, ashes, bones, and other organic waste that were relatively local, often connecting rural and urban populations through substantial logistics systems275468. But such methods could only maintain continuous productivity for so long; nutrient recycling is not perfect8254, and indeed soil depletion became an issue in industrializing nations as populations grew58. Alternative methods such as leaving land fallow or crop rotation did not fit the need for maximizing near-term productivity.

To resolve this dilemma, in the 19th century industrializing nations began sourcing fertilizer from distant places—mainly Peru, but also Chile, Ecuador, and Bolivia, in the form of guano and later nitrates996827100. This "Guano Age" saw a tremendous transfer of wealth to British business interests that essentially controlled this trade10027 as well as many deaths through horrendous slave-like working conditions of laborers from China and throughout Latin America2710199 and through multiple wars, including one following Spain's seizures of guano-rich islands100, a proxy war funded by the British so they could essentially annex Bolivian and Peruvian nitrate and guano producing regions10027, and a civil war in Chile also funded by the British to prevent Chile's nationalization of its fertilizer resources100 (Gregory Cushman's Guano and the opening of the Pacific world: a global ecological history provides a detailed look into this history).

Many Pacific Islands also saw their share of dispossession and violence. The US sought to secure its own guano deposits, passing the Guano Islands Act in 1856 to unilaterally allow US entrepreneurs to claim new guano islands as US territories27. Under this act 66 islands were claimed as US territory99, with at least 8 still under claim or in dispute. Other colonial powers followed suit, compelling a flurry of claims throughout the Pacific99 that devastated the islands unfortunate enough to be endowed with rich deposits. Two such islands, Banaba and Nauru, were key locations for the mining of phosphate rock. The Banabans, after years of violence and occupation, were eventually resettled after about 90% of their home island's surface was left mined and the trees they relied on for food essentially gone2210210399. Nauru didn't fare much better, with 80% of the surface left looking like a 'moonscape'104102. While Nauru achieved independence in 1968, they are left in a state of economic despair103.

The picture looks very different for the beneficiaries of this extractivism. The vast majority of the nutrient wealth extracted from these regions went to European and descendant countries such as the US, Australia, and New Zealand. European-style agriculture was unviable in Australia and New Zealand until imports of fertilizer derived from the Pacific islands turned each into exporters of meat and wool99103 . The fertilizer that resulted allowed the agricultural expansion that supported the industrial development of these countries27. Peru, like Nauru and Banaba, saw little benefit. With guano supplies basically depleted, Peru's farmers became dependent on different nutrient supply chains: imported synthetic fertilizers99.

For nitrogen fertilizer the most egregious cases of this imperialist exploitation came to an end with the Haber-Bosch process27, which as discussed above is not without its own significant problems and is still, with its reliance on fossil fuels, fundamentally extractivist. For other nutrients, especially phosphorus, this is not the case. While there Nauru and Banaba's phosphate resources are basically depleted, North Africa is still a site of phosphate mining to this day. In particular, the case of Morocco and Western Sahara shows a clear through-line from fertilizer's broader colonial history to its continuation in the present.

Under French control the region supplied phosphate for France's agriculture101. Morocco continues to be the leading exporter of phosphate with the largest reserves105, with estimates of up to 75%-85% of the world total reserves106426997 . Yet some of these reserves that are counted as Morocco's are not in fact in Morocco. They are in Western Sahara, what is sometimes called the "world's last colony"10739. Morocco has occupied Western Saharan since 197542107, with about 80% of the country under Moroccan rule108 under what is best described as a police state, with violence against and killings of activists, politically-motivated imprisonment, and other human rights violations4210911011110810739, with hundreds of Sahrawi "disappeared" and tortured by the Moroccan government10839. Most Sahrawi fled to Algeria, where they still mostly live in refugee camps4210810739.

This occupation is at least in part motivated by Western Sahara's phosphate reserves, which is of uniquely high quality107105. Some 10% of Moroccan phosphate income comes from the Bou Craa mine in Western Sahara42 and makes up most of the income Morocco gets from the region75 (fishing is another big source11210839). As of 2015, Morocco has made an estimated profits of $4.27 billion from Western Saharan phosphate rock mines69.

The UN and the International Court of Justice both recognize Western Sahara's right to self-determination10839, and no country officially recognizes the Moroccan occupation as legitimate75107105. Extraction of resources from an occupied territory is clear violation of international law107, which determines that the Sahrawi people should have "permanent sovereignty over [their] resources"39. Yet, over fifty years since this promise of self-determination, as countries continue to import Western Saharan exports10575, has not materialized in any meaningful way.

This is probably because several countries have a vested interest in Morocco. Morocco an important ally to US and the recipient of the most US foreign assistance in the region108107, including financial support and training for the Moroccan military and its operations against the Polisario Front108105, the Sahrawi nationalist movement. The US also helped plan the initial Moroccan invasion of Western Sahara; Kissinger feared communist activity in the area108105. Morocco has suggested using its phosphate production as a lever for protecting its claim to Western Sahara—to pressure Russia, for example75. In 1985, India recognized the independent Sahrawi state, the Sahrawi Arab Democratic Republic (SADR), but withdrew recognition in 2000 when they established a joint venture with the Morocco phosphate industry107.

Fertilizer production elsewhere also depends on phosphate exported from the area. 50% of the Bou Craa mine's output supplies fertilizer producers in North America, though in 2018 Canada-based Nutrien, the major North American importer, stopped accepting imports from the Bou Craa mine75. Other major importing countries are India, New Zealand, and China75. There have been some recent victories, however. In 2017, the Sahrawi Arab Democratic Republic successfully asserted a claim to a cargo of Bou Craa phosphate rock which was sold to New Zealand-based Ballance Agri-Nutrients75.

As phosphate rock supplies dwindle elsewhere, production from places like Morocco become even more important. Some projections say that Morocco's market share could increase to 80-90% by 20306910, effectively giving Morocco monopoly over the global phosphate market and a greater investment in maintaining control of Western Saharan deposits.

From all this it's clear that the current use of synthetic and non-renewable fertilizers is unsustainable. Yet at the start of the millennium Vaclav Smil concluded that we are fated, at least for the next century, to depend on Haber-Bosch54, and presumably the system of agriculture that is built on it. Have things changed in the intervening decades? Do we have better options now?

There are not many options for substituting phosphate rock (for phosphorus fertilizers the main approaches are using less or exploiting excess accumulated "legacy" phosphorus113), so the focus here will be on nitrogen fertilizers. The main options can be roughly grouped into two categories. There are those which move away from synthetic inputs towards more "natural" approaches, such as organic farming systems, and those which more or less stick to the industrial-chemical model but substitute Haber-Bosch for different processes. Both of these feature into utopian visions of future food systems.

Fertilizers By Other Names

Natural Factories? Organic Systems

As alluded to above, the major differentiator of agricultural systems' impacts is nutrient management and the nutrient bottleneck6511482115116549955. Organic systems forgo synthetic fertilizers and other synthetic chemical inputs (though there are circumstances where some may be allowed). Beyond that there can be quite a lot of variety among specific organic systems. More traditional techniques such as intercropping can be used, but aren't required for the organic label to apply114.

In lieu of synthetic fertilizers, other fertilizers like manure are used. These can be less consistent than synthetic fertilizers, as they can have a lot of variety in nutritional composition14 and also require additional microbial action before the nutrients are available to the plants (in synthetic fertilizers they're immediately available, for better or worse)16115. But, in addition to the lower environmental footprint, they have other advantages over synthetic fertilizers. Whereas synthetic fertilizers can lower the pest resistance of plants—thus requiring pesticides117118—organic fertilizers tend not to have this problem119120. They may also better contribute to soil carbon sequestration8165 and improve soil quality in other ways121.

The benefits of organic fertilizers are somewhat tempered when taking into account the impacts of collection and transportation. A farm using organic fertilizer produced on-site is going to require less energy than one that ships organic fertilizer from some distant elsewhere. The reduced nutritional density of organic fertilizers also means that transport energy expenditure on a nutritional basis can be higher as well. But in general because no energy-intensive production process is required, the energy requirements are still substantially less than synthetic fertilizers16.

The debate around the viability of wholesale replacement of conventional agriculture with organic systems inevitably ends up on the problem of the "yield gap"—that is, the difficulty organic systems have of matching the productivity of conventional systems.

Comparing organic and conventional agriculture systems is complicated. There are so many other context-specific factors that influence their relative performance, such as the particular crop, the growing environment, water availability, labor costs, and so on, and these factors may be related in zero-sum ways that by now are familiar: yield increases as N fertilizer use increases, but at cost of water pollution12212355. Comparisons may use a variety of crop specifically bred to maximize productivity under conventional systems, at the expense of traits which make them more productive under organic systems11482. Comparisons can be further complicated by histories of colonialism and displacement, where the highest-quality land is captured by large-scale operations that also happen to utilize industrial agriculture techniques, thus further contributing to higher yields. Larger gaps are found when cereal crops are compared, but this might be because Green Revolution technologies have focused on improving cereal yields in particular114. This is part of a larger trend: agricultural research largely focuses on techniques and technologies applicable in conventional systems, whereas organic and sustainable techniques are relatively underfunded58. The yield gap could be closed further with more research and funding.

One meta-analysis over several hundred agricultural systems—biased towards Western agricultural systems, including the most industrialized systems—concluded that organic systems fall short against conventional systems when compared on a yield basis; i.e. organic systems require more land, result in more eutrophication, and have similar greenhouse gas emissions per unit of food produced6582124. The yield gap ranged from a concerning 50% to a more modest ~90% of conventional output82114. Use of well-known techniques like cover cropping and intercropping can further close the gap6511414, but the gap could also widen under the effects of climate change124.

When this yield gap is taken into account, many of the environmental benefits of organic agriculture are severely reduced to the point where conventional agriculture looks more environmentally friendly. The difference is usually attributed to the fact that organic methods require much more land to achieve the same output124. Thus, for example, benefits in soil carbon sequestration are offset by the increase in deforestation from expansion of agricultural lands12565123. These comparisons, however, use life-cycle assessments (LCA) that are often biased towards the conventional conception of agriculture—that is, focused on agricultural outputs as products—failing to account for the very different conception from organic and other alternative forms that prioritize ecosystem health and have longer-term outlooks. For example, the effects of pesticides, though a key difference between organic and intensive systems, is often not considered126. Land degradation is also generally not included in these assessments126. On a long enough time scale the land degradation resulting from conventional agriculture undermines agricultural productivity (thus lowering these efficiency benefits from the LCA perspective)79. We might also consider that, though perhaps industrial agriculture theoretically has lower land use requirements, the political economy of industrial agriculture is such that it will expand regardless of this land-use efficiency. Organic agriculture, as co-opted by existing agribusiness, is not itself necessarily immune to this.

Such results by no means indicate that conventional agriculture is "sustainable" (especially considering its dependency on finite resources such as phosphate rock) or that it's "low-impact" (still requiring more energy than organic and with human health consequences from pesticides, water pollution, and so on)65. In any case, the prospect that industrial agriculture, which drives all the issues described above, is somehow better for the environment is a troubling if counterintuitive finding. Are there other newer approaches that resolve this dilemma? Ones that are both high-yielding and less harmful?

Take It Inside: Indoor Systems

One common proposal for improving agriculture—in terms of both yields and impacts—is to challenge its relationship to land. This set of agricultural systems fit neatly into narratives of high-tech progress, including hydroponics, aeroponics, aquaponics, and vertical farming. Hydroponics and aeroponics are two prevailing soil-less growing techniques, in which plants are grown out of water (in hydroponics and aquaponics) or nothing at all (aeroponics), and vertical farming can operate on either. These roughly all fall under the umbrella of indoor farming techniques (they don't need to be indoor, but they almost always are), which also encompasses more "traditional" greenhouse approaches, but has a more potent contemporary imaginary in the form of extremely high yield per acre operations on otherwise non-arable land, with tightly-controlled environments and factory-like efficiency (some of these methods are grouped under PFALs: "plant factories with artificial lighting"127 or just "plant factories" as artificial lighting is usually a given).

Within the -ponic techniques is substantial variety: the exact method can vary (hydroponic, for example, includes "deep water culture" and "nutrient film technique" among others), as can specifics of the configuration (though these techniques are usually associated with artificial lighting, they can also use sunlight), and growing environment (higher energy requirements, for example, in a colder climate)12865, and the specific crop grown, so generalizations can be a little tricky. But there is enough in common across these approaches that some comparisons are possible.

In terms of yields, hydroponic systems can have higher yields than conventional open-field systems367. In the context of urban agriculture, a substantial amount of a city's food demand can be met with local rooftop farms: for example an estimated 77% of Bologna's food demand is met in this way129. A recent study on urban horticulture in the UK (Sheffield) suggests that the expansion of rooftop farms could help meet a substantial amount of the city's fruit and vegetable needs130.

With respect to fertilizer's environmental impacts, these configurations feature closed water systems, meaning that water is used more effectively and recycled. This reduces both water usage and nutrient runoff and improves nutrient efficiency compared to conventional systems31311326713313425127. However, there is still potential for mismanagement and downstream pollution, as salt accumulation in the circulating nutrient solution still results in wastewater that must be properly disposed of128129.

A closed system can be more self-sustaining in other ways, such as using biomass waste to generate biogas to heat the greenhouse or using anaerobic digestion to produce fertilizer on-site128. Aquaponics generally avoids the use of synthetic fertilizers because fish waste provides nutrients13313567, though this does not necessarily mean a complete independence from synthetic fertilizers: fish feed is still an external input, and some setups may require nutritional supplements67.

Advocates of these systems claim they don't require pesticides, but indoor livestock farming was pitched on similar promises that haven't panned out136. Avoiding pesticide use is even more crucial when these farms are in cities129, so this claim warrants even more scrutiny than in other contexts.

These systems can have lower emissions1323, with most emissions coming from the facility structure (steel and concrete)132127, other fixed infrastructure (e.g. pipes)1343129132, and energy use25137, though this varies with energy mix. One way to reduce emissions and other impacts from the facility structure is to re-use existing buildings, though this comes with penalties as the buildings are not tailored specifically for indoor agriculture12925.

Perhaps one of the most lauded benefits is versatility in location—indoor methods don't require arable land137. Indeed, they can require substantially less land even when considering the land use for energy sources. One paper estimates 1:3 ratio of greenhouse area to solar panel area and assumes "a conversion efficiency of 14% and an average daily solar radiation value of 6.5 kWh per square meter per year"5. Using sunlight rather than artificial lighting is even better—one could imagine reclaiming the top floors of abandoned buildings to augment local food production.

From a financial perspective, these indoor systems tend to have high capital costs1371381331395. This includes not only equipment costs, but also the land itself, which in urban centers are expensive compared to rural land, and operating costs139—you pay for what is otherwise provided for free, e.g. sunlight. They tend to grow only leafy green and herbs for business model viability138136, especially because conventional agriculture can still produce the same food for much more cheaply.

Even though the arability of the farm site itself is less important, other geographical aspects still are. For example, where water is particularly expensive (e.g. in Egypt where deep wells must be dug and maintained133), the cost of starting an aquaponics farm is still quite expensive133. Of course, there are situations where the high cost may make sense if it's not possible to grow anything otherwise and other land uses don't make sense1395.

The climate of the site is another important factor. Hydroponics systems can have tremendously more energy requirements compared to conventional agriculture, varying a lot by climate (cooler climates require more heating, for example), which can be disproportionate to yield increases56514013412867. Lighting is the other big energy factor, but climate control is the cause for most of the variation between hydroponic systems25512913767. If the system is such that it has exposure to natural light (e.g. rooftop gardens), then the energy demand is of course further reduced.

In urban environments, these systems can have several additional benefits, depending on how tightly they're integrated with existing urban processes13712825127. The proximity to consumers can reduce impacts from packaging and transport129127, as well as minimizing food loss as well129137. Integration with rainwater harvesting systems can reduce draw on city water supplies12967, and wastewater systems can potentially be developed as nutrient solutions, rather than relying on synthetic fertilizers128. This could reduce the energy used in wastewater treatment, as the nutrients currently processed out to avoid eutrophication can be used to feed plants instead141.

But the fact that indoor methods don't require arable land is a little deceiving. Like conventional agriculture, arability still must be "imported" via inputs like synthetic fertilizers, so in some respects environmental impacts are merely dislocated from the site of production rather than altogether eliminated. Indoor methods are generally not designed to use organic fertilizers, as organic fertilizers are less consistent, less nutritionally dense, and often require some kind of microbial action to make the nutrients available to the plant (the exception here is aquaponics, which is designed around fish waste as fertilizer). For indoor systems, fertilizer production is still the major contributor to eutrophication1293132137. Even though less of this occurs at the site of use, plenty still occurs along the production and distribution chain.

So while these indoor approaches appear to have many benefits and may have a role to play in food production for a limited set of contexts140 (where energy costs are favorable, renewable energy sources are used, the high capital requirements are manageable, and to supplement the diets of land-scarce urban centers), but we shouldn't expect them to replace agriculture wholesale. And they do not necessarily lend themselves to socially-desirable models of production. One could imagine a near future in which insolvent smallholder farms on already marginal lands are displaced for investors to build towering vertical farms in their place.

With regards to fertilizer, they're still held back by many of the problems with synthetic fertilizers—especially their production. Are we stuck with the Haber-Bosch process? Is there a cleaner way of producing them?

Other Routes to Nitrogen

Though the Haber-Bosch process is the dominant way of producing nitrogen fertilizer, there are a variety of potential alternatives14230. These alternatives usually focus on Haber-Bosch's use of fossil feedstock and high energy requirements, stemming from its need for very high temperatures (300-500C) and pressures (200-300atm)37—"the single most energy-guzzling element of farming"143. These intense demands contrast to the microbes that fix nitrogen under far less extreme temperatures and pressures due to nitrogenase enzymes37.

Naturally, the promise of these nitrogenase enzymes has attracted research interest. One direction is focused on producing nitrogen fertilizer with solar power, at room temperature and normal pressure using these enzymes 143144, though at present the process is slower than the Haber-Bosch process24. There is also more recent research into methods using primarily water (for the hydrogen, that is normally supplied by fossil fuels in the Haber-Bosch process5837), air, and electricity ("primarily" because the process may require expensive metals like palladium as a catalyst145) to create small fertilizer synthesizing devices. Other "solar" fertilizers are similarly produced from water and air but use solar energy directly, rather than through electricity. These also tend to produce more dilute fertilizers that can help avoid overapplication146. This could take the form of relatively small, low-maintenance devices—"artificial leaves"147—that are amendable to decentralized production146 37. A decentralized model has several advantages, such as reducing transportation needs, which can make up a significant portion of fertilizer costs, especially for areas with poor infrastructure146. It may also offer an alternative to the existing capital-intensive, highly-concentrated world of fertilizer production, with its deeply entrenched interests and commitment to the Haber-Bosch status quo.

The American Farm Bureau Federation (AFBF), an agriculture industry lobbying group, is deeply committed to the fossil fuel-based method. The AFBF has a long history of working closely with the fossil fuel industry, supporting oil and gas domestic extraction (e.g. fracking), and even coal (which can be used as an input into fertilizer production91, and common in Chinese ammonia production92). This support has sometimes taken the form of outright climate change denial43. The Fertilizer Institute, a lobbying group for the fertilizer industry, is also closely connected to the fossil fuel industry, supporting expanded natural gas production, framing it explicitly as necessary for national food security and threatened by climate change policy148. This allegiance is likely only to grow stronger as pressure grows against the fossil fuel industry.

Questions of industry power aside, there is a lot of uncertainty about the viability of these alternative technologies2437145. A handful of companies cropped up a few years ago trying to produce net-zero emissions fertilizer by replacing Haber-Bosch fossil feedstocks with biomass and switching to renewable energy149, but none of the plants ever went through and the companies all went bankrupt150151. The available information seems to indicate mismanagement as a big contributor to these failures but it seems reasonable that the recent decline in natural gas prices—which has led to conventional nitrogen fertilizer production in the US to expand9293—is likely a large factor as well. The economics continue to favor fossil feedstocks.

Finally, changing the production process doesn't necessarily help address many of the other downstream effects of nitrogen fertilizer. What room is there then for innovating on fertilizer? Is there something with the productivity of synthetic fertilizer but with less of the harm?

"A Sewer is a Mistake"

A handful of social reformers lamented this partition, begging their fellow citizens to bridge the expanding chasm between city and farm. In his 1862 novel Les Misérables, Victor Hugo wrote, "There is no guano comparable in fertility with the detritus of a capital. A great city is the most mighty of dung-makers. Certain success would attend the experiment of employing the city to manure the plain. If our gold is manure, our manure, on the other hand, is gold."27

One option is to return to the older way of thinking: nutrients not as something to be produced but as something to be reused. The use of animal manure is a common example. If animal wastes can be used as fertilizer, then why not human waste too? 75 to 90% of nitrogen intake is excreted as urine54, which is also responsible for more than half of both the phosphorus and potassium in domestic wastewater (an estimated 16% of all mined phosphorus passes through the wastewater system) while constituting less than 1% of its volume152. In fact, recycling of human waste into fertilizer was once a key part of the agricultural nutrient cycle, until demand outpaced the recycled nutrients, more powerful fertilizers were introduced, and waste became seen as less of a resource and more of a problem (e.g. because of diseases) to be managed153154. Subsequent sanitation innovations responding to this conception of waste-as-problem such as flush toilets and more sophisticated sewage systems with the purpose of moving waste away from people also contributed to the disruption of these older "circular economies"68.

That such a system worked in the past means that we could try to restore it, and there is a renewed interest in recycling human waste into fertilizer155. Phosphorus can be recovered from wastewater through a variety of means, such as using sewage sludge, which has been used to generate high yields14. Or the nutrient-rich wastewater can be used directly as a medium to grow microalgae to treat the wastewater and harvest nitrogen and phosphorus to produce fertilizers156. We excrete almost all of the phosphorus we ingest68, and it can be recovered from wastewater69157. One study finds that some 40% of Australian phosphorus use can be fulfilled with a sewage recovery system96. The value of waste takes other forms too. Biochar is another soil amendment that can be produced from sewage sludge, which does not work well as a nitrogen fertilizer but can have other benefits such as increasing soil carbon sequestration and preventing nutrient leaching1581464158.

Unfortunately the valorization of wastewater for fertilizer is not without problems. The removal of nitrogen and phosphorus are both energy-intensive152 and the scalability of these systems are still uncertain68.

One key complication is waste contamination. Even in the past nightsoil required treatment to be safe54, and now we face a substantially more diverse set of chemicals, including pathogens, pharmaceuticals, cosmetics, and heavy metals from automotive and industrial runoff159115152. This is true even of animal wastes. Manure from concentrated animal feeding operations (CAFOs, i.e. "factory farms"), which, though massive in volume and thus an ample supply of nutrients, suffers from these problems of contamination160. This question of contamination has meant that these human waste-derived fertilizers aren't yet permitted for organic farms82. However, processes like vermicomposting, which uses earthworms to digest waste into more nutritious—potentially increasing some mineral concentration (phosphorus, for example) by up to 120% percent156, can reduce the content of some of these contaminants15914. However, the economics are again unfavorable: mining phosphate rock is cheaper than recovered phosphorus.

Reusing excreted nutrients rather than dumping them into the environment is a clear improvement to our current system, but the biggest limitation is that no nutrient recycling process will be able to recover all of the incoming nutrients. We couldn't rely on it alone: even a high-efficiency waste nutrient recycling system will still need to be supplemented.

Little Helpers: Biofertilizers

There is a class of fertilizers called "smart fertilizers" which usually refer to some slow-release mechanism so that nutrients are released more synchronously with plant uptake (i.e. released as plants need, instead of all at once). The mismatch of nutrient availability and plant need is a large factor in why fertilizer runoff and N2O emissions are as bad as they are; if plants used more of the nutrients then less would make it to waterways6447. This category encompasses a number of different methods, including organic wastes64, using slow-release casing, and biofertilizers.

Biofertilizers are fertilizers based on microbes; for example, nitrogen biofertilizers take advantage of existing nitrogen-fixing microorganisms such as cyanobacteria161162163164165. Like other fertilizers, they increase yields163164159166, and like the naturally-occurring instances of these microbes, biofertilizers may provide additional benefits such as pest protection161167163164168165, reduced soil erosion6165, additional growth beyond just that from the increased nutrient supply164159168165, and additional carbon sequestration165. The production process can involve fermentation (e.g. with yeast164168) or vermicomposting (i.e. the use of worms) from agricultural waste or wastewater16415914. These processes are accessible even in conditions of low capital (they are substantially cheaper than synthetic fertilizers169168) or without additional energy sources.

The use of such "plant probiotics"163 is not itself new163614170—for example, use of manures also introduces these microbes1646 and some are already commercialized on a small scale163. Environmental concerns around the Haber-Bosch process and other extractive fertilizer production processes, along with advances in biotechnology164, are drawing a renewed interest in commercializing the technology on a larger scale. There are however issues with scaling this to an industrial model: for example, transport and storage of living organisms is more complicated than relatively inert chemical fertilizers16116414.

However, again these biofertilizers are being developed within the context of the prevailing agrochemical industrial regime; companies like Bayer (who purchased Monsanto in 2018) and Nutrien (a $34-billion fertilizer company and second-largest producer of nitrogen fertilizer in the world171) are partnering with startups developing this biofertilizer technology, which is also focusing more on developing novel microbes rather than adapting existing ones167172.

The homemade biofertilizer Super Magro is one example of the more promising route biofertilizers could take. It consists of a simple, low-cost fermentation process and was released as an open source recipe for other farmers to use freely and augment169173.

What do we do?

Comparisons of these options are usually stated in terms of crop yield. Though some are still in development, these alternatives generally have yields that are worse than or only comparable to the prevailing synthetic fertilizer regime. There are cases where lower yields might be acceptable, such as with hydroponic urban agriculture where no food would be grown otherwise136. But in general, when viewed through crop yields, many analyses find these alternatives to be more environmentally damaging because of their lower yields.

Generally the emissions of agriculture are tied to yield17440; systems that are more intensive and produce higher yields end up having lower emissions per output. For example, organic systems have lower yield per hectare so they require more land to produce the same amount of food that an industrial farm would, which then leads to more deforestation and greater carbon emissions40. The worrying trade off between feeding people and reducing environmental impact seems to dissipate: industrial agriculture surprisingly gives us the best of both worlds. But this doesn't make any sense—we know that approach to agriculture has substantial harms.

This apparent paradox becomes resolvable by examining its key assumption: that feeding everyone necessarily requires absolutely maximizing agricultural productivity, which at present means widespread synthetic fertilizer use. This is the mainstream framing of the problem, advocated by organizations like the Bill and Melinda Gates Foundation175. That is, the core cause of inadequate nutrition and starvation is taken to be that we aren't growing enough food. Therefore we need powerful synthetic fertilizers to help us grow enough.

But are we really not growing enough food?

Food Waste

If we focus on food production we end up overlooking another important aspect: food waste. Consensus on exactly how much food is wasted is difficult because of lack of comprehensive data; estimates usually rely on surveys, looking through trash, or inferring through some other model. What is defined as food waste also varies. It can be simply the food that could have been eaten but is not (some definitions require that the food be eaten by a person, e.g. not a pet). or include food eaten in excess of nutritional requirements33176, or include that can no longer be sold, even if it still edible154, or still further be complicated by cultural differences, where food in one culinary tradition is considered waste in another154.

What is consistent across these definitions and data is that there is a lot of food wastage. Estimates of food waste range from 20% to 50%33177 (the wide variation is due to the differing definitions of food waste and outdated or lack of comprehensive data176), and appears to be growing19, even as agricultural output also grows178. In North America and Europe alone, where most of the waste occurs (twice as much as in Sub-Saharan Africa and South and Southeast Asia), this waste could "feed the world's hungry three times over"33.

Accounting for food waste dramatically increases the impact of each calorie of food consumed. Food waste accounts for as much as 38% of energy in the food system178; in the US this amounts to at least 2% of overall annual energy consumption179. This translates to a large amount of carbon emissions: in the UK, some 17 million tonnes CO2 emissions are attributable to food waste180; globally this figures to about 8 to 10% of GHG emissions (25-30% of food production's emissions)35. These impacts include not only the emissions from production, but also that food waste that ends up in landfill emits CO2 and methane19. For the UK, an estimated half of waste emissions comes from food waste33. Clearly if our concern is the environmental impact of food production, then food waste represents wasted harm. Reducing food waste not only reduces the impact per calorie from our existing food system, but makes a transition to a less harmful system more feasible in general because less overall output is required124.

Food waste is, on one end, usually framed as a technical problem for developing countries, and on the other, a behavioral one for developed countries. In developing countries, most food waste is attributed to lack of adequate technology and infrastructure. This might include poor transportation infrastructure that leads to food lost in transit, lack of well-sealed or cold storage leading to increased spoilage or infestation, crude harvesting equipment that leaves edible material behind33181154. In wealthier countries, more food waste occurs closer to the consumer18018117633, in part because improved production, storage, and distribution infrastructure reduces the share of waste at earlier points in the chain but also because higher incomes entail more wasteful habits182, such as an avoidance of "ugly" produce181183176182, excessive portion sizes181, and poor meal planning181176. Confusing, inconsistent, or outright arbitrary best-by/best-before/expiration date language is also an issue181184183, as are promotions encouraging larger purchases. There may also be legal obstacles preventing the distribution of excess food. The spatial organization of the global food system also drives waste: the more sprawled out a food system is, the farther food has to travel, which means more opportunities for food to be lost or spoiled along the way176.

With this understanding the policy recommendations are usually straightforward. For developing countries solutions focus on technology and infrastructure, which can be as simple as sealed storage drums176. Developed countries food waste policies focus around consumer education and behavior change18033181154 and removing hurdles to distributing surplus food35. For example, in France there is legislation from 2016 that fines groceries from throwing away food that's still good, which has resulted in more food donations and less food waste185. There are also community-based solidarity groups such as Food Not Bombs that try to ensure that food is being used to feed people.

But this analysis and its consequent policy solutions overlook how endemic these problems are to the political economy of food production—not just technical or behavioral problems—and how developing and developed food waste is deeply interconnected, where food waste is in fact structurally rational.

For example, consider the avoidance of "ugly" produce. It is not only consumer preference at point of purchase that leaves "ugly" produce behind but the decisions of retailers as well. For example, Walmart sets standards about carrot straightness and color, causing one supplier to discard as much as 30% of its carrots that do not meet these standards178. The EU used to have regulations specifying standards around the curvature of bananas154 among many other standards regarding other produce183. The cost of such standards are borne by smaller farmers, especially those in the Global South who have no influence over such decisions183 and often can't afford the more sophisticated harvesting technologies that reduce bruising176. In these cases the absence of demand leaves food to spoil154.

Yet, despite the narrative that producer waste is less of an issue in wealthier countries, similar dynamics are found. In California, for example, on average 24% of perfectly good food is left on fields unharvested4. Crop varieties, labor costs, and environmental factors all play a role, but so too do consumer preferences (leaving blemished food behind) and market prices—when prices are low, more may be left unharvested.4

Questions around food production really obscure two issues at play here: one is the question of whether or not we produce enough food to meet everyone's dietary needs, and another is whether or not that produced food is accessible to everyone. Looking more closely, our food production system blows past the first issue, and the problems of hunger and malnutrition have much less to do with sheer agricultural productivity and more to do with how food is production is governed and how food is distributed11412455186187. The need for the environmentally-damaging acceleration of agricultural output becomes murkier.

The Contradiction of Cheap Food

You could argue that productivity and access are actually closely linked. According to the laws of supply and demand, increasing productivity increases access because it will drive the prices of food down. The massive productivity increases in agriculture over the past century has seen an equally massive decrease in food prices, with many food commodities at 67-87% of their 1900 price182. It's hard to dispute that food prices are an important factor in food security; rising prices of food are not ever really thought of as a good sign precisely because it points to a decreased access to food.

With the hopes of avoiding high food prices and creating caloric abundance countries then would have an interest in developing agricultural productivity as much as possible, if not the farmers themselves. Yet then a crisis of overproduction occurs, in which a massive surplus of food does indeed drive prices down, but to the point where farmers are unable to recoup the costs of production.

A great deal of US agricultural policy over the past century is precisely about managing this overproduction. In the US, during downward prices and overproduction in the lead up to the Great Depression, the US had "breadlines knee-deep in wheat"49. The US government paid farmers leave land idle during the Great Depression to avoid overproduction and consequent price drops. Controversy around the policy in 1949—framed as paying farmers to not work—led to a different system establishing price floors, guaranteeing farmers a minimum price for their product, thus encouraging them to maximize use of their land188. This also meant that these downstream industries paid the farmers' incomes—and included conservation incentives which discouraged intensified exploitation of more and more land189. Some farmers support a return to these policies190191189, with a recognition that the drastically low prices contemporary subsidies enable also undermine the livelihoods of farmers in other countries—because cheap US exports set the price for other countries' agricultural products and enable a dependency on imports to the detriment of self-sufficient agriculture, which then allows agribusiness to buy up unused land to produce cash crops for export1192.

One of the consequences of overproduction is a squeeze where farmers can't recoup their costs of production. In the US, the 1970 price of corn was about $8 a bushel in today's dollars. Today it's about $4 a bushel. At the same time, the price of fertilizers have doubled193. Trends like this mean that, for many crops, prices have been below production costs for decades191. The subsidies which are meant to compensate for the lost income from these lower prices do not adequately fill the gap, with farm incomes—with subsidies included—seeing large declines191. US farm income has been decreasing8, down to 50% of 2013 income in 2018194. In Canada, farmer market net income—income after subtracting government payments, has been negative at least as of 2010195.

Debt has long played a big role in agriculture, helping to smooth out the staggered nature of farm expenditure and income—many expenses are upfront, but income isn't realized until after the harvest196. This difference is especially large in input-intensive agriculture, with the amount of chemicals used. A bad harvest can disrupt this cycle, leading to falling behind on loans and jeopardizing future access to capital196.

Against the backdrop of this price-cost squeeze, this debt can only accumulate. In the US, farm debt has been increasing since 2013194. At the same time the business of farm loans is expanding—the fertilizer company Nutrien expanded its farm loan program threefold to $6 billion197, further profiting off of a problem their expensive input regime created in the first place. Because incomes have been declining, farm loan delinquencies have hit a 9 year high198.

At the same time, these low prices, which are encouraged by subsidies and crop insurance guarantee an income to farmers, are to the benefit of downstream industries, such as livestock and snack/beverage/food processing companies, lowering the costs of their inputs. Effectively, taxpayers provide discounts for these businesses191, saving them some $3.9 billion per year199200190. With these low prices, the corn for a box of Kellog's Corn Flakes—which makes up 88% of the product—represents only 2.4% of the retail price51. These downstream companies—as well as the input companies, i.e. those that provide fertilizer, chemicals, seeds, machinery, etc—have seen record profits201 and provided massive payouts to shareholders:

As revealed in a recent report conducted by TUC and the High Pay Center on the behaviors of FTSE100 companies in the last 5 years, listed retailers paid over £2bn to shareholders in 2018, despite none of them being Living Wage Foundation accredited, while listed Food & drinks companies paid almost £14bn – more than they made in net profit (£12.7bn). To put that into perspective, just a tenth of this shareholder pay-out is enough to raise the wages of 1.9 million agriculture workers around the world to a living wage, for example those who produce food at the origin of the food chains that they coordinate as lead firms.20

In addition to cheaper inputs, overproduction gives these downstream companies more discretion over what they will and won't accept. For example, companies adopt stricter quality standards183 and retailers can return whatever they don't want or fails to sell in time, at no cost176, making farming even riskier than before.

For the farmer in this trap, there appear to only be a handful of options. One standard practice is to suppress the wages of farm workers, who are already among the most precarious group of workers. In the US, the H-2A visa system is used to keep wages low for migrant labor, to the point where a quarter to almost a third of such families are below the federal poverty line18202 and lowers wages for domestic farm labor as well203.

Another option is to expand markets for food. The problem with food is that it is relatively inelastic in demand; but one argument sees food waste against a backdrop of increasing food consumption in the US, with marketing that tries to enlarge the American appetite to offload an oversupply of cheap food19178. Farmers themselves do not typically have a role in this expansion; and most marketed food is in the form of snacks and processed foods of that nature; i.e. products of companies downstream from farming.

The other is to increase production. The low prices mean that productivity and volume is all the more important to make a living, which encourages environmentally harmful but output maximizing processes and the expansion of agricultural land18949. This only makes the problem worse—as farmers attempt to stay afloat by accelerating production, the markets for their crops end up flooded, further driving prices down.

The other is to overproduce to hedge against small harvests but withhold excess to keep prices high183. This is why we see for example perfectly good food left unharvested to rot. This behavior seems unreasonable—it is in many ways—but is rational, considering the farmer's position. When dealing with large retailers, the situation is such that if they fail to supply the agreed amount, they are dropped as a supplier176183, so the rational move is to overproduce just in case.

All of this is made easier to endure or even profit from with scale. The high costs of inputs and low prices of output are especially felt by smaller farms. We see this in US farming: almost 80% of small family farms' operating profit margin are in the "high risk level". In contrast, large-scale farms tend to be at a "low risk level"889. Larger farms gain better access to loans and better prices204. The current subsidy system exacerbates this, disproportionately benefiting larger farms who aren't struggling in the price-cost squeeze891887427205 and also tend to be more environmentally destructive79, even when accounting for the larger cropland8. Numbers range from 75-85% of farm subsidies going to only 10-15% of farms20686, and the 2018 Farm Bill has not really altered this dynamic207. The advantages of highly-capitalized, larger farms contributed to the consolidation of farming into fewer bigger farms204. In the US some 3 million farms went out of business in the postwar period208.

Even though dealing with agricultural overproduction has been a chronic problem of US agriculture, it came to have its usefulness in achieving US geopolitical goals. Harriet Friedmann192 and Philip McMichael187 outline a succession of "food regimes" where agricultural power is applied to capitalist expansion. Following World War II, the US instrumentalized its agricultural surplus by directing it towards contributing to European reconstruction and using food aid to slow the spread of communism78192187. Later, finding outlets for this surplus itself became a geopolitical goal. For example, developing markets for agricultural products through World Bank and IMF structural adjustment programs and WTO free-trade agreements in the Global South20978187210 . The development of such markets is itself partly accomplished by the surplus of US agricultural overproduction, which undermines local agriculture with low prices and disrupting food security. Rather than developing food self-sufficiency, the focus is on economic development to increase the affordability of imported food211192. In a strangely circular way, agriculture is no longer for growing food, but for growing commodities with which to buy food.

Part of these economic development programs is the consolidation of productive land and its re-orientation towards cash crops, further undermining food security212. This is the case in Zimbabwe, where the areas with the highest agricultural productivity also have the worst chronic malnutrition213. At the same time, the use of non-tariff barriers, such as sanitary and phytosanitary measures, effectively allow exporting nations to protect their own markets and make it so that only highly-capitalized operations can absorb the costs these additional measures require214.

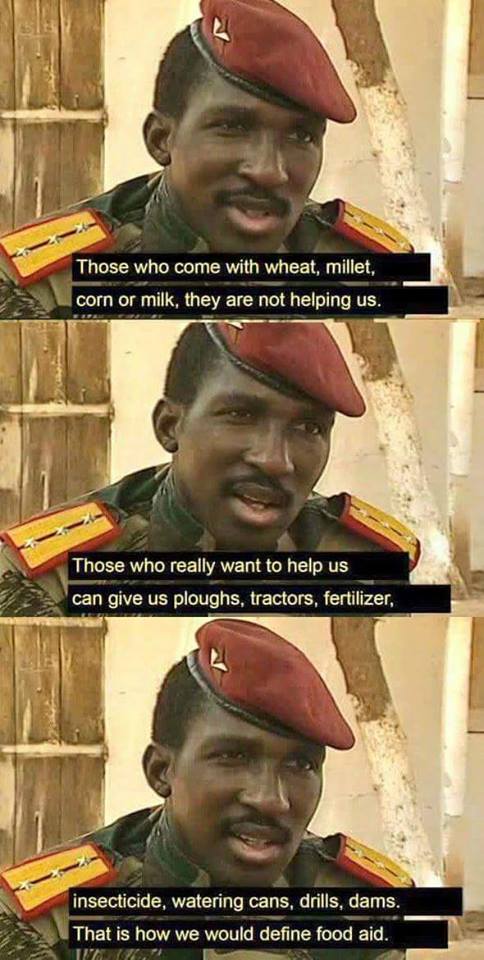

Figures like Thomas Sankara saw how food aid was exploitative and that self-sufficiency the route that preserved autonomy:

However, major organizations like the UN's FAO, World Bank, and the IMF and foundations like the Gates Foundation continue to press that low productivity is the main problem with food security and thus synthetic fertilizer, high-yielding varieties, and other Green Revolution technologies need greater levels of adoption in developing countries 215216.

Fanning the Flames

Mexico

The Green Revolution was first coordinated as a development strategy in Mexico. David Barkin describes how these programs saw an increase in dependency on food imports21778, even though Mexico has all it needs for food self-sufficiency218. Even worse, the expansion of the agricultural industry is in parallel with decreasing food consumption. These programs of productivity increases are driven with a focus on increasing export-oriented crops, crops for markets, rather than for improving self-sufficiency218205. Achieving the maximum productivity of a land isn't possible without the capital required for the purchase of synthetic fertilizer, pesticides, high-yielding variety seeds, and so on. Poorer farmers are displaced by those with that necessary capital, instead needing to find off-farm work to buy the imported food27218204219205.

This process of undermining self-sufficiency has the consequence of creating new markets for countries to sell their food surpluses to, and facilitates the process of proletarianization, creating a pool of cheap labor218215. It also serves other geopolitical goals as well. Barkin quotes one American diplomat commenting on Micronesia, who plainly put it:

If we examine our effects on the island culture, we see they have a real case. Before the U. S. arrived, the natives were self-sufficient, they picked their food off the trees or fished. Now, since we have them hooked on consumer goods, they'd starve without a can opener. Some of the radical independence leaders want to reverse this and develop the old self-sufficiency. We can't blame them. However, we can't leave. We need our military bases there. We have no choice. As some of my friends in Washington say, you've got to grab them by the balls, their minds and hearts will follow 218

Viewed this way, the Green Revolution in Mexico has a continuity with the colonization of Latin America, in which colonists monopolized the most fertile land, away from their function of sustaining the local population and instead towards producing profitable crops for export. Those who used to farm those lands are marginalized—if they weren't outright killed—towards less productive lands with greater water scarcity215.

Sub-Saharan Africa

Sub-Saharan Africa (SSA) is constant target for Green Revolution development220. Food imports in SSA have increased for decades219, and part of this is blamed on low productivity due to low fertilizer usage22160 and high levels of soil degradation and erosion222. Fertilizer is especially expensive in SSA, in large part due to high transportation costs221 (some of this might be addressed by recent development of domestic production capacity223) ; farmers there can pay as much as five times as a farmer in Europe6968. Because of the overapplication of fertilizers elsewhere, and the accompanying environmental harm, some call for increasing fertilizer use in SSA with a corresponding or larger decrease elsewhere (for example, 588kg nitrogen fertilizer/ha/year in northern China vs 7kg in Kenya)224.

The soil degradation which supposedly necessitates synthetic fertilizer in SSA is often attributed to poor management, a form of development rhetoric that frames peasants as ignorant about how best to work their land215205212222216. More recent degradation looks to have more to do with climatic and biophysical factors222, but the farming of soil eroded lands has more to do with a process of marginalization—the original farmers had developed their methods of effective soil conservation222213—where larger landholders, European colonists, and transnational corporations monopolize the highest quality land and displace other farmers to smaller and smaller holdings, where the land has to be worked harder to meet the same needs205204213. In some cases, it is these transnational companies that overwork the soil, moving on to other fertile land once it's been exhausted205.

The increased adoption of synthetic fertilizers might only accelerate this soil degradation. One study looked at Zambia's input subsidy program and found that the introduction of fertilizer subsidy programs does in fact increase fertilizer usage, which subsequently leads to a reduction in traditional low- or no-input fertility-management methods such as fallowing and intercropping and an increase in continuous cropping—that is, a shift towards more intensive agriculture that disrupts natural cycles of soil restoration38225226. This shift in turn contributes to reduced levels of soil organic matter, which is important for maintaining soil health, which decreases the soil quality. Though there was a short-term boost in productivity, the resulting soil degradation could lead to a net decline in the long run which can only be delayed by further increasing the usage of expensive fertilizer225.

A review of eighty studies on fertilizer subsidies in Africa found that these subsidies are more likely to go to households with larger landholdings, greater wealth, and/or connections to the government, even if programs are ostensibly deliberately designed to avoid such outcomes224227. Fertilizer use stops once subsidies are withdrawn, so farmers are left with degraded soil and unable to afford the now necessary inputs to stabilize yields. Ultimately, productivity increases peter out after a few years, and there are only negligible effects on improving self-sufficiency, reducing import dependency, and alleviating poverty227.

These subsidies are expensive: African governments devote a large part of their budgets to these input subsidies, some $1 billion a year225220. For Zambia, as much as 35% of the country's agricultural budget was going towards fertilizer subsidies224. At one point, 9% of the Malawi's entire budget went to these subsidies212. This is more or less a transfer of public funds to fertilizer producers like Bayer/Monsanto212220.

As high as these subsidies are, they can't compete with those in wealthier countries. OECD countries provide subsidies to their own farmers of about $1 billion per day228, and such financial support encompassed 30% of all farm receipts from 2003-2005178.

This is compounded by the fact that Green Revolution technologies often come as a package. Specific varieties of crops are necessary to achieve the full yield improvements of synthetic fertilizer use (this varieties are usually intellectual property), and increased sensitivity to pests from fertilizer user necessitates the use of pesticides38119229. Higher productivity can also require more water, beyond what the water cycle normally provides, so irrigation also increases27.

In the case of Malawi, the suggested alternative to fertilizer subsidies is addressing underlying issues:

policies aimed at relieving the key constraints that our model tries to capture—such as land, credit and labor availability—might go further towards addressing the bottlenecks to on-farm intensification that smallholders in Malawi encounter, and could lead to the adoption of better on-farm practices that reverse soil degradation and improve the long-term viability of small-holder agriculture.226

Interestingly, many countries in SSA are agricultural exporters while being net food importers. That is, SSA's dependency on food imports is in part due to agricultural land and resources being directed for export-oriented production (in a form reminiscent of their colonial histories230) rather than supplying the country's food needs—"even a small substitution in their agricultural export products into food would eliminate their deficits"210.

This push towards agricultural production for export is in part driven by this need for income to purchase food imports and in part to manage national debts. The 1990s, Malawi, under the advisement of USAID and the tobacco industry, re-oriented production from food crops to tobacco crops with the goal of paying off its debt, with part of the rationale being that revenue from tobacco exports would be plenty to purchase the food needed to account for reduced production. The consequence, however, was that the ability for Malawian farmers to feed themselves was no longer dependent on their own agricultural ability, but rather on market prices for tobacco—which fell by 37% in 2009, and made it difficult for farmers to feed themselves212.

Sometimes this shift to export-oriented cash crops is further frustrated by the surpluses of major producers. Where some countries had advantages in producing things like sugar, the rise of processed foods meant fewer people were consuming sugar directly, instead mediated by processed foods—and as such, that opened up the possibility of sugar substitutes, i.e. HFCS, which then undermined countries with the comparative advantage for sugar production, as corn was overproduced and very cheap. Thus these countries were in a double-bind: food import dependency plus declining revenues from crop exports192.

These dynamics appear elsewhere too. In Nepal, though fertilizer subsidies do tend to increase crop yields, environmental problems are exacerbated231, and the subsidies may disproportionately benefit farmers who are more connected to the state and/or wealthier to begin with231221. In India, initial bumper crops from subsidized fertilizer application then gave way to crop failures and soil depletion38232, saw a move away from traditional techniques like crop rotation towards continuous cultivation of export-oriented crops38, and increased stratification between farmers who could afford the Green Revolution technologies and those who could not afford to stay on their land38205. Vandana Shiva describes deeper social shifts these technologies fomented:

The shift from internal to externally purchased inputs did not merely change ecological processes of agriculture. It also changed the structure of social and political relationships, from those based on mutual (though asymmetric) obligations—within the village to relations of each cultivator directly with banks, seed and fertilizer agencies, food procurement agencies, and electricity and irrigation organisations.38

Hand to Mouth

Content warning: There is some mention of suicide in this section.

This high-input and market-dependent form of agriculture puts farmers into a double-bind: they are both exposed to the impacts of fertilizer prices on their own production costs but also on the costs of their food1569. One study finds that increasing fertilizer prices would decrease crop yields by up to 13%7, reducing income.

In addition to the typical weather and climatic risks of agriculture, they are now exposed to the volatility of export restrictions, financial speculation, as well as the weather and climactic risks of wherever they import food from2334815! Risk has magnified, rather than diffused, through this system23415233212.

Arrangements such as contract farming integrate smaller farmers into global agricultural industrial supply chains through arrangements that ensure buyers (with substantially more market power, who do not need contribute to the costs of production, also ways around labor laws) bear little of this risk78212, much like the increasing use of contract workers throughout other industries.

Scalability becomes absolutely vital to generate enough revenue to remain solvent. Farmers with lower yields—i.e. subsistence farmers and those with less access to the capital necessary for scaling up—are the ones at the greatest risk19882235. Agricultural finance recognizes this, compounding the effect by giving larger farms cheaper loans, along with other advantages such as more opportunities to exploit cheap labor204. The trap is intensified due to the declining effectiveness of inputs, which have the effect of degrading the soil232. The general necessity of absolutely maximizing output for a piece of land encourages the extractivist model of agriculture, in which the soil is "mined", with nutrients pulled out faster than they can be naturally replaced78; sometimes even faster than they can be "unnaturally" replaced. In India, farmers require three times the fertilizer to achieve the same yields as they used to, while the prices for those crops remain the same or decline232. Insolvency is basically inevitable.

When farmers try to re-integrate traditional practices or develop systems parallel to conventional inputs, they are shut down. In Zimbabwe, farmers could not sell their own seeds as alternatives to the engineered hybrids—the practice was made illegal213.

What are the consequences of this precarity and lack of control over one's life? One may be an increase in farmer suicides21—farming is the profession most at-risk for suicide236. The farmers most likely to commit suicide are those who have marginal landholdings, focus on cash crops, and are indebted, which are exacerbated by all of the dynamics described above213821652.

Another consequence of this precarity is the increasing concentration of agricultural land and production.

Land Grabbing

Farmers, now unable to support themselves on the land, have no choice but to sell it. Agricultural land concentrates into fewer and fewer hands, contiguous with the dispossessions that occurred with colonization. Here, however, these dispossessions result from a mix of economic factors, driven by overproduction, and legal means, with post-colonial states, under the direction of development organizations like the US's Millennium Challenge Corporation, often facilitating the process by unilaterally replacing traditional land right systems with ones more amenable to foreign investment and ownership212218237.