Mobile Trends

For some time now, there’s been a great deal of buzz about “mobile”. Despite the hubbub, it seems that a lot of applications of “mobile” have just been direct translations of web/desktop products to smartphones, an approach that doesn’t take full advantage of the platform’s potential.

At the highest level, mobile allows us to bring the interconnectedness we’ve come to love, which has so far been confined to computers (in the laptop/desktop sense), to the rest of the world. Mobile technologies enable us to create a digital layer upon the physical world, making tangible the intangible information embedded in all physical objects. Your smartphone will become your ambassador to this digital world, communicating with this digital layer and translating it into useful, human-comprehensible output.

Here are the broader mobile trends and potentials that I’ve noticed:

Literal Input

With the increase of software pseudo-intelligence and the proliferation of alternate input devices on mobile devices (most notably the camera, which of course isn’t really all that new, but also other sensors), mobile devices (and computers in general) will be capable of understanding increasingly literal input.

As it stands now, there exists a gap between our information needs and the queries we end up forming to (hopefully) express those needs in a way a computer can understand. With computers becoming better at “doing what humans are good at”, this gap will shrink. Computers will be able to digest direct and literal input, creating an altogether more fluid and enjoyable mobile experience. In fact, we’ve already been seeing this for a while with technologies such as Google Goggles and Word Lens (though current technology often leaves something to be desired). With Google Goggles, for instance, instead of having to translate a physical object into a manually entered search engine query, the application can directly “understand” the visual input of the object itself.

Context-Aware Automation/Prediction

With increasingly “intelligent” software - perhaps the fruits of the latest “big data”/data science/etc. craze - we can expect these interactions to require less and less of our personal guidance and management, and to become more automated and predictive.

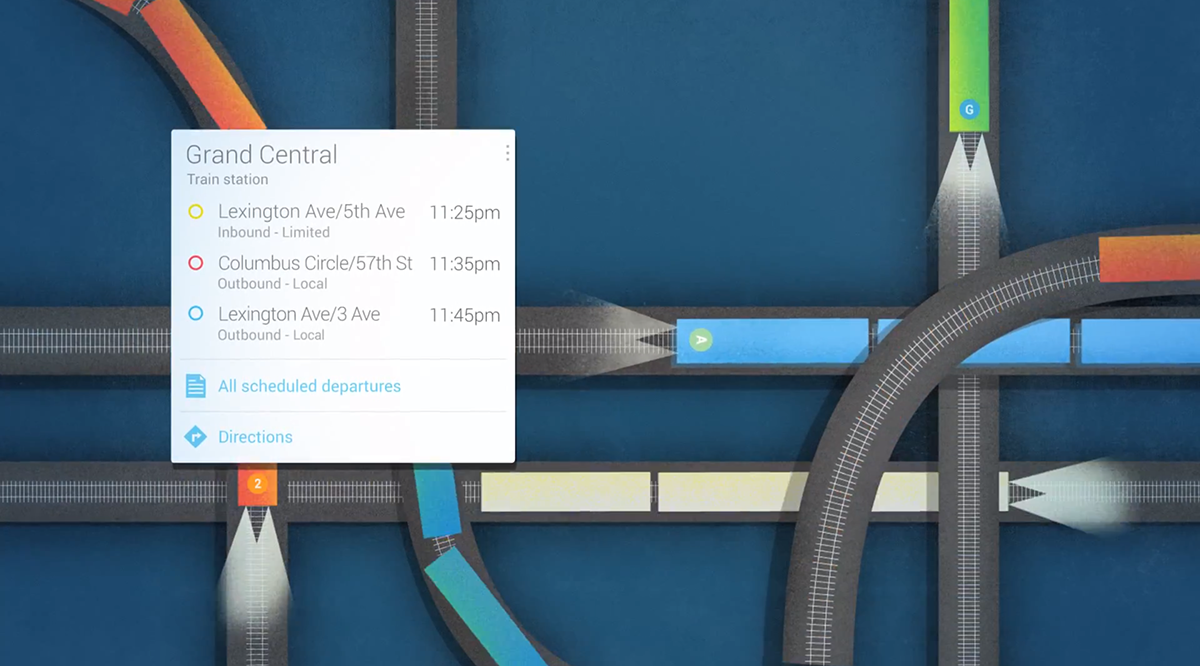

This is something that Google is already tackling with Google Now, which learns your behaviors and tries to predict what information you need based on your current context.

The implications of such technology are pretty huge. Perhaps we’ll see some realization of “machine augmented cognition” where mobile devices function like an auxiliary brain, remembering things for us, handling small tasks for us automatically, and knowing when to do all this without needing our management or guidance.

It’s worth noting that context-aware automation & prediction leapfrogs over literal input because you are presented with your information before you’ve even formed your information need!

Device modularity through accessory ecosystems

Mobile is not a single-device experience. Right now the mobile experience is pretty centralized around, if not exclusively focused on, your smartphone. But we’ll see more external (and wearable) sensors that extend the capabilities of our smartphones. These sensors will function as inputs: our smartphones will communicate with them and translate their streams into something useful and informative to a person. This is already emerging with personal health monitoring devices such as Jawbone UP, Nike+ FuelBand, Fitbit, etc.

The adoption of these sensors reflects how people are becoming more and more interested in turning to the objective reality that data describes as a means of informing personal betterment and self-management. Is this indicative of a broader “computer infallibility” trend where we form greater and greater distrust in our own subjective experiences and evaluations, and instead fill this void with an increasing reliance on the objective “truth” (perhaps more appropriately “fact” but really just “data”) of computers?

In general though, such a network of sensors allows us to automate what used to rely on manual input and/or memory to a level of granularity that we could not hope to realistically achieve on our own. We wouldn’t even necessarily need to ever be exposed to the raw data but instead just have the data pre-processed into what’s most relevant to us, such as broader trends or simply suggestions on what to do next.
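To make the “pre-processed into broader trends” idea a bit more concrete, here is a minimal sketch. It assumes a wearable hands the phone one step count per day; the function name `summarize_steps`, the 7-day window, and the 5% threshold are all hypothetical choices for illustration, not how any particular device actually works.

```python
from statistics import mean

def summarize_steps(daily_steps, window=7, threshold=0.05):
    """Reduce raw daily step counts to a simple trend.

    Compares the average of the most recent `window` days with the
    average of the `window` days before that. All parameters are
    illustrative assumptions, not taken from any real device.
    """
    if len(daily_steps) < 2 * window:
        raise ValueError("need at least two full windows of readings")

    previous = mean(daily_steps[-2 * window:-window])
    recent = mean(daily_steps[-window:])

    # Only report a change when it exceeds the threshold in either direction.
    if recent > previous * (1 + threshold):
        trend = "up"
    elif recent < previous * (1 - threshold):
        trend = "down"
    else:
        trend = "flat"

    return {"previous_avg": previous, "recent_avg": recent, "trend": trend}


if __name__ == "__main__":
    readings = [8000] * 7 + [6000] * 7  # made-up sample data
    print(summarize_steps(readings))
```

The person never has to look at the fourteen individual readings; they just learn that their activity is trending down, which could in turn drive a suggestion on what to do next.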

These sensors could also empower “invisible computing”. Currently, the process of documenting an experience necessarily interrupts the experience (unless documenting the experience is the experience, which certainly can be the case - think “Instagram”), but with such a network of sensors, this documentation can happen subtly in the background. This allows us to return to the pre-smartphones-everywhere experience of experiences, where experiencing the experience meant just experiencing it.

Increasingly layperson-friendly computer interaction paradigms

These other trends contribute to a broader one of increasingly layperson-friendly computer interaction paradigms (and this isn’t really confined to mobile). Computing interaction could more directly correlate with physical metaphors/analogues that most people find more intuitive. We’ll have to make fewer special considerations when using computers, such as having to edit our queries so that a computer may understand them. Things will just work as the average person would expect them to.

For instance, file transfer amongst computers typically requires going through a router. To people unfamiliar with computers, that doesn’t really sound logical - if a computer can connect with things wirelessly, why not to another computer? Why does it have to go through something else to get there? Less technical folk can take it on faith that it just has to work that way, but that’s never satisfactory for anyone.

With the proliferation of WiFi Direct, however, computers can directly connect with each other, in a way that makes a lot more sense to the layperson. Adding an interaction that allows “moving” physical gestures (such as “pushing” the file to another computer) makes the whole experience that much more intuitive.

Of course, as is typical with technology, there’s a whole mess of things that could go wrong. There are always potentially disruptive implications of a new technology or usage pattern at a broader scale. For instance, these wearable sensors have controversial implications in a society where ubiquitous recording is often treated as a crime or as an infringement upon rights (see the EyeTap McDonald’s scandal).

With wearable sensors there’s also the potential for cultural and social resistance (think of the negative reputation of Bluetooth headsets), but I haven’t really seen that myself with these latest health monitoring devices.