Friends of Tarok, Lord of Guac Chapter I: Party Fortress: Technical Post-Mortem

Last weekend Fei, Dan, and I put on our first Party Fortress party.

Fei and I have been working with social simulation for a while now, starting with our Humans of Simulated New York project from a year ago.

Over the past month or two we’ve been working on a web system, “highrise”, to simulate the social dynamics of buildings.

The goal of the tool is to be able to lay out buildings and specify the behaviors of their occupants, so as to see how they interact with each other and the environment, and how each of those interactions influences the others.

Beyond its practical functionality (it still has a ways to go), highrise is part of an ongoing interest in simulation and cybernetics. Simulation is an existing practice that does not receive as much visibility as AI but can be just as problematic. It seems inevitable that it will become the next contested space of technological power.

highrise is partly a continuation of our work with using simulation for speculation, but whereas our last project looked at the scale of a city economy, here we’re using the scale of a gathering of 10-20 people. The inspiration for the project was hearing Dan’s ideas for parties, which are in many ways interesting social games. By arranging a party in a peculiar way, either spatially or socially, what new kinds of interactions or relationships emerge? Better yet, which interactions or relationships that we’ve forgotten start to return? What relationships that we’ve mythologized can (re)emerge in earnest?

highrise was the engine for us to start exploring this, which manifested in Party Fortress.

I’ll talk a bit about how highrise was designed and implemented and then the living prototype Party Fortress.

Buildings: Floors and Stairs

First we needed a way to specify a building. We started by reducing a “building” to just floors and stairs, so we needed to develop a way to lay out a building by specifying floor plans and linking them up with stairs.

We wanted floor plans to be easily specified without code, so developing some simple text structure seemed like a good approach. The first version of this was to simply use numbers:

    [
      [[2,2,2,2,2,2,2],
       [2,1,1,1,1,1,2],
       [2,1,1,1,1,1,2],
       [2,2,1,1,1,2,2]],
      [[2,2,2,2,2,2,2],
       [2,1,1,1,1,1,2],
       [2,1,1,1,1,1,2],
       [2,2,0,0,0,2,2]]
    ]

Here 0 is empty space, 1 is walkable, and 2 is an obstacle. In the example above, each 2D array is a floor, so the complete 3D array represents the building. Beyond one floor it gets a tad confusing to see them stacked up like that, but this may be an unavoidable limitation of trying to represent a 3D structure in text.
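To make the encoding concrete, here is a minimal sketch of reading that 3D array. It is written in Python for brevity and is not highrise's actual code; the constant and function names are made up for illustration.

```python
# Cell codes from the text format described above.
EMPTY, WALKABLE, OBSTACLE = 0, 1, 2

# The two-floor building from the example: building[floor][row][col].
building = [
    [[2, 2, 2, 2, 2, 2, 2],
     [2, 1, 1, 1, 1, 1, 2],
     [2, 1, 1, 1, 1, 1, 2],
     [2, 2, 1, 1, 1, 2, 2]],
    [[2, 2, 2, 2, 2, 2, 2],
     [2, 1, 1, 1, 1, 1, 2],
     [2, 1, 1, 1, 1, 1, 2],
     [2, 2, 0, 0, 0, 2, 2]],
]


def is_walkable(building, floor, row, col):
    """True if the position exists in the building and is walkable."""
    if not 0 <= floor < len(building):
        return False
    plan = building[floor]
    if not (0 <= row < len(plan) and 0 <= col < len(plan[row])):
        return False
    return plan[row][col] == WALKABLE


def walkable_cells(building, floor):
    """List every walkable (row, col) position on one floor."""
    return [(r, c)
            for r, row in enumerate(building[floor])
            for c, cell in enumerate(row)
            if cell == WALKABLE]
```

The same position can differ between floors: `(3, 2)` is walkable on the first floor but empty space on the second.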

Note that even though we can specify multiple floors, we don’t have any way to specify how they connect. We haven’t yet figured out a good way of representing staircases in this text format, so for now they are explicitly added and positioned in code.

Objects

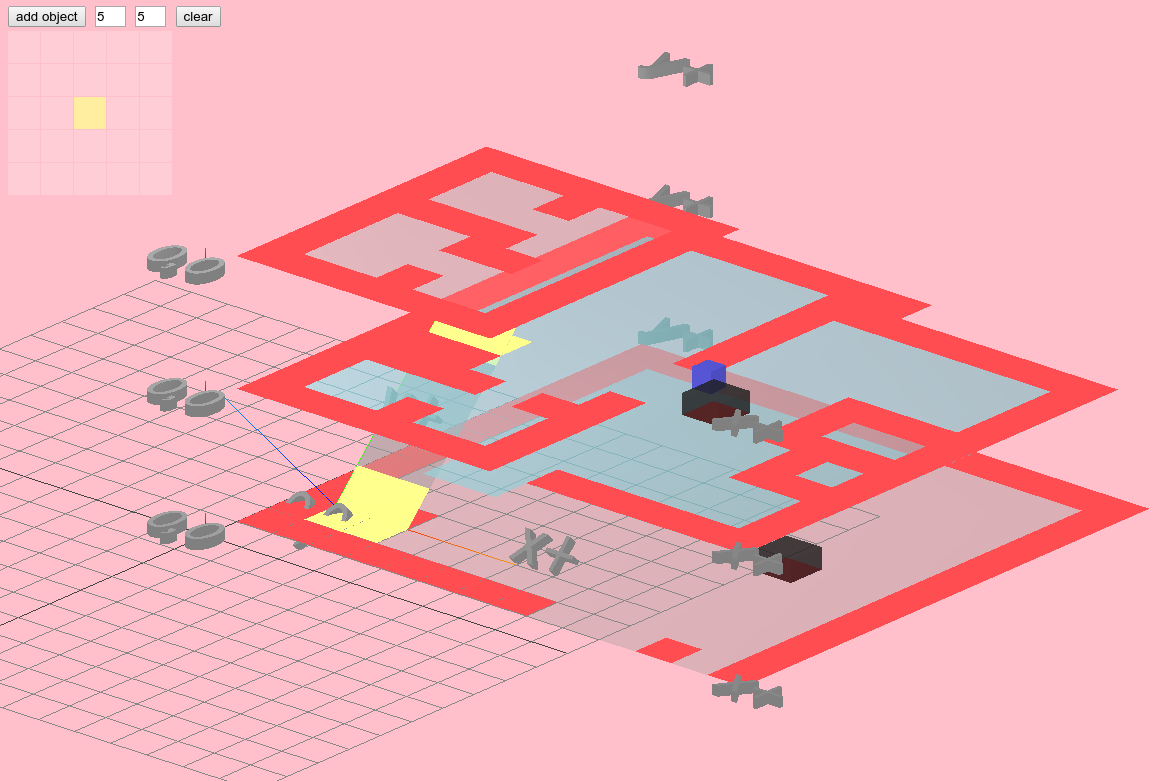

A floor plan isn’t enough to properly represent a building’s interior - we also needed a system for specifying and placing arbitrary objects with arbitrary properties. To this end we put together an “object designer”, depicted below in the upper-left hand corner.

The object designer is used to specify the footprint of an object, which can then be placed in the building. When an object is clicked on, you can specify any tags and/or key-value pairs as properties for the object (in the upper-right hand corner), which agents can later query to make decisions (e.g. find all objects tagged food or toilet).

Objects can be moved around and their properties can be edited while the simulation runs, so you can experiment with different layouts and object configurations on-the-fly.

Expanding the floor plan syntax

It gets annoying to need to create objects by hand in the UI when it’s likely you’d want to specify them along with the floor plan. We expanded the floor plan syntax so that, in addition to specifying empty/walkable/obstacle spaces (in the new syntax, these are '-', ' ', and '#' respectively), you can also specify other arbitrary values, e.g. A, B, ☭, etc, and these values can be associated with object properties.

Now a floor plan might look like this:

    [
      "#.#.#. .#.#.#",
      "#. .#. . .#",
      "#. .#.#.#. .#",
      "#.A. . . . .#",
      "#. .#.#.#.#.#",
      "-.-.-.-.-.-.-"
    ]

And when initializing the simulation world, you can specify what A is with an object like this:

{ "A": { "tags": ["food"], "props": { "tastiness": 10 } } }
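As a rough illustration of how such a plan and legend could be turned into a grid plus a list of placed objects, here is a sketch in Python (not highrise's actual parser). It assumes that `.` separates cells, which is inferred from the examples rather than stated, and that the space under an object is otherwise walkable. The `parse_floor` function and its output shape are hypothetical.

```python
# Built-in symbols from the expanded syntax.
SYMBOLS = {"-": "empty", " ": "walkable", "#": "obstacle"}


def parse_floor(rows, legend):
    """Parse one floor. Returns (grid, objects), where grid[r][c] is
    'empty', 'walkable' or 'obstacle' and objects lists every placed
    legend symbol along with its tags and props."""
    grid, objects = [], []
    for r, line in enumerate(rows):
        grid_row = []
        # Assumption: cells are separated by '.' characters.
        for c, cell in enumerate(line.split(".")):
            if cell in SYMBOLS:
                grid_row.append(SYMBOLS[cell])
            elif cell in legend:
                # Assumption: objects sit on otherwise walkable space.
                grid_row.append("walkable")
                objects.append({"id": cell, "pos": (r, c), **legend[cell]})
            else:
                raise ValueError(f"unknown symbol {cell!r} at row {r}, col {c}")
        grid.append(grid_row)
    return grid, objects


# A small made-up plan (not the one from the post).
plan = [
    "#.#.#.#",
    "#.A. .#",
    "-.-.-.-",
]
legend = {"A": {"tags": ["food"], "props": {"tastiness": 10}}}
grid, objects = parse_floor(plan, legend)
```

Raising on unknown symbols is a deliberate choice in this sketch: a typo in a floor plan is easier to track down as an error than as a silently missing object.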

The syntax still isn’t as ergonomic as it could be, so it’s something we’re looking to improve. We’d like it so that these object ids can be re-used throughout the floor plan, e.g.:

    [
      "#.#.#. .#.#.#",
      "#. .#. .A.#",
      "#. .#.#.#. .#",
      "#.A. . .A. .#",
      "#. .#.#.#.#.#",
      "-.-.-.-.-.-.-"
    ]

so that if there are identical objects they don’t need to be repeated. Where this becomes tricky is if we have objects of footprints larger than one space, e.g.:

    [
      "#.#.#. .#.#.#",
      "#. .#. . .#",
      "#. .#.#.#. .#",
      "#.A.A. . . .#",
      "#.A.#.#.#.#.#",
      "-.-.-.-.-.-.-"
    ]

Do we have three adjacent but distinct A objects or one contiguous one?
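One possible convention, offered only as an assumption and not as something highrise does, would be to treat orthogonally adjacent cells with the same symbol as a single object. A flood fill can then recover each footprint. The sketch below is in Python and `group_footprints` is a hypothetical helper.

```python
def group_footprints(cells):
    """cells maps (row, col) -> symbol for every object cell in a floor plan.
    Returns a list of (symbol, footprint) pairs, where each footprint is the
    sorted list of orthogonally connected cells sharing that symbol."""
    seen = set()
    groups = []
    for start in sorted(cells):
        if start in seen:
            continue
        symbol = cells[start]
        stack, footprint = [start], []
        seen.add(start)
        while stack:
            r, c = stack.pop()
            footprint.append((r, c))
            for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
                # Only spread into unvisited cells carrying the same symbol.
                if nxt not in seen and cells.get(nxt) == symbol:
                    seen.add(nxt)
                    stack.append(nxt)
        groups.append((symbol, sorted(footprint)))
    return groups


# Three touching A cells plus one A elsewhere on the floor.
cells = {(3, 1): "A", (3, 2): "A", (4, 1): "A", (1, 4): "A"}
groups = group_footprints(cells)
```

Under this convention the three touching cells become one L-shaped object and the far-away cell becomes a second one. The cost is that two identical objects can no longer be placed side by side without some extra marker.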

This ergonomics problem, in addition to the stair problem mentioned earlier, means there’s still a bit of work needed on this part.

(Spatial) Agents

The building functionality is pretty straightforward. Where things start to teeter (and get more interesting) is with designing the agent framework, which is used to specify the inhabitants of the building and how they behave.

It’s hard to anticipate what behaviors one might want to model, so the design of the framework has flexibility as its first priority.

There was the additional challenge of these agents being spatial; my experience with agent-based models has always hand-waved physical constraints out of the problem. Agents would decide on an action and it would be taken for granted that they’d execute it immediately. But when dealing with what is essentially an architectural simulation, we needed to consider that an agent may decide to do something and need to travel to a target before they can act on their decision.

So we needed to design the base Agent class so that when a user implements it, they can easily implement whatever behavior they want to simulate.

Decision making

The first key component is how agents make decisions.

The base agent code is here, but here’s a quick overview of this structure:

- There are two state update methods:
  - `entropy`, which represents the constant state changes that occur every frame, regardless of what action an agent takes. For example, every frame agents get a bit more hungry, a bit more tired, a bit more thirsty, etc.
  - `successor`, which returns the new state resulting from taking a specific action. This is applied only when the agent reaches its target. For example, if my action is `eat`, I can’t actually `eat` and decrease my hunger state until I get to the food.
- `actions`, which returns possible actions given a state. E.g. if there’s no food at the party, then I can’t `eat`.
- `utility`, which computes the utility for a new state given an old state. For example, if I’m really hungry now and I eat, the resulting state has lower hunger, which is a good thing, so some positive utility results.
  - Agents use this utility function to decide what action to take. They can either deterministically choose the action which maximizes their utility, or sample a distribution of actions with probabilities derived from their utilities (i.e. such that the highest-utility action is most likely, but not a sure bet).
  - This method also takes an optional `expected` parameter to distinguish the use of this method for deciding on the action and for actually computing the action’s resulting utility. In the former (deciding), the agent’s expected utility from an action may not actually reflect the true result of the action. If I’m hungry, I may decide to eat a sandwich thinking it will satisfy my hunger. But upon eating it, I might find that it actually wasn’t that filling.
- `execute`, which executes an action, returning the modified state and other side effects, e.g. doing something to the environment.
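The structure above could be sketched roughly as follows. This is a Python illustration, not the real base class: the method signatures, the `decide` helper, the softmax sampling, and the `PartyGoer` example are all assumptions made for the sake of the example.

```python
import math
import random


class Agent:
    """Hypothetical base class; subclasses supply the behavior."""

    def __init__(self, state):
        self.state = dict(state)

    def entropy(self, state):
        raise NotImplementedError

    def successor(self, action, state):
        raise NotImplementedError

    def actions(self, state):
        raise NotImplementedError

    def utility(self, state, prev_state, expected=False):
        raise NotImplementedError

    def execute(self, action, state):
        # Side effects on the environment would also go here.
        return self.successor(action, state)

    def decide(self, temperature=None, rng=random):
        """Pick the best action, or sample one if a temperature is given."""
        options = self.actions(self.state)
        scores = [self.utility(self.successor(a, self.state), self.state,
                               expected=True) for a in options]
        if temperature is None:
            return options[scores.index(max(scores))]
        # Softmax: higher utility means more likely, but never certain.
        top = max(scores)
        weights = [math.exp((s - top) / temperature) for s in scores]
        return rng.choices(options, weights=weights, k=1)[0]


class PartyGoer(Agent):
    def entropy(self, state):
        return {**state, "hunger": state["hunger"] + 1}

    def successor(self, action, state):
        if action == "eat":
            return {**state, "hunger": max(0, state["hunger"] - 5)}
        return dict(state)

    def actions(self, state):
        return ["eat", "idle"] if state["food_available"] else ["idle"]

    def utility(self, state, prev_state, expected=False):
        # Less hunger than before is good.
        return prev_state["hunger"] - state["hunger"]
```

With food around, `PartyGoer({"hunger": 8, "food_available": True}).decide()` picks `eat`; without food, `idle` is the only option. Passing a `temperature` switches from the deterministic choice to sampling.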

Movement

Agents can also have an associated Avatar which is their representation in the 3D world. You can hook into this to move the agent and know when it’s reached its destination.

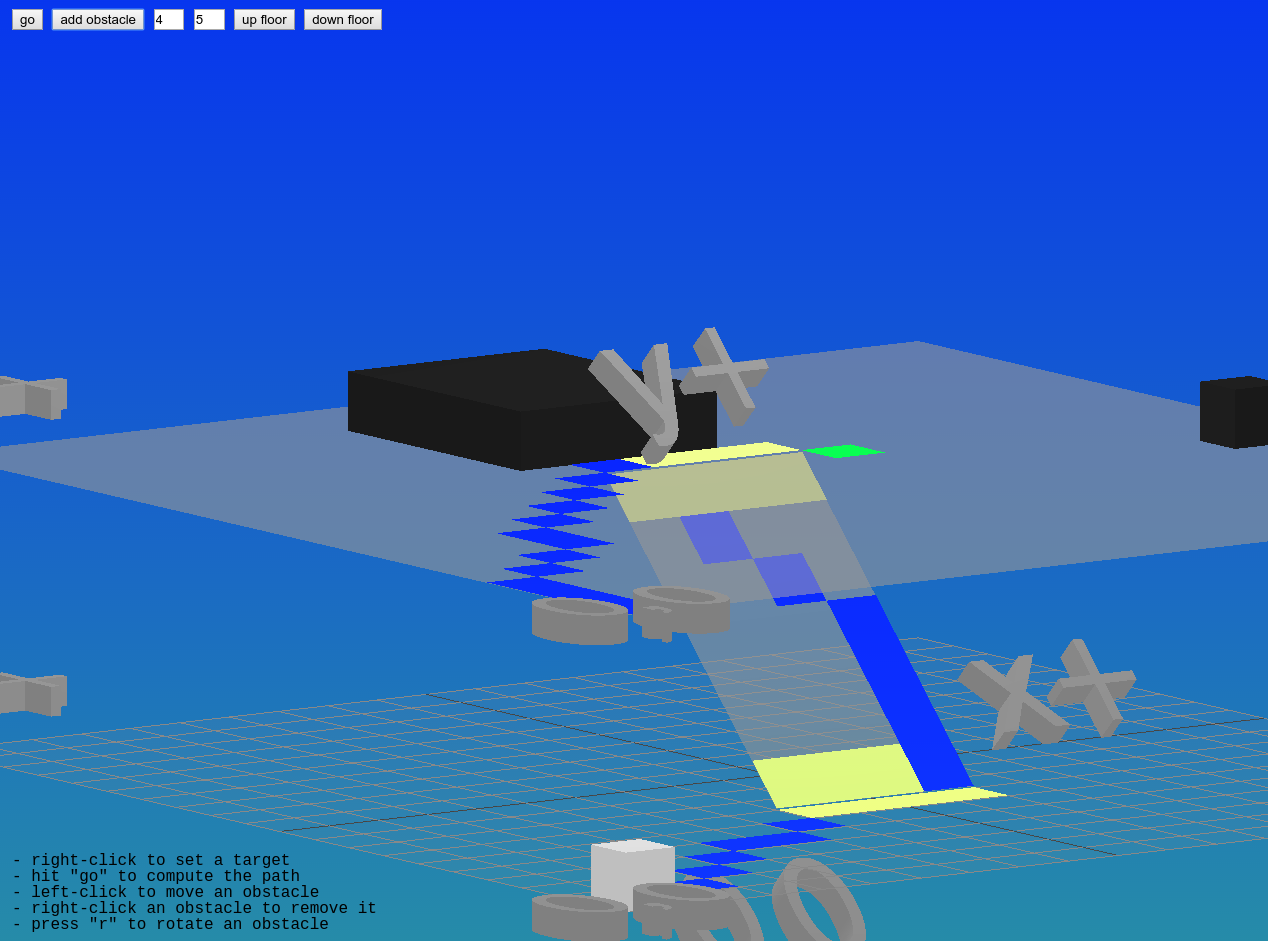

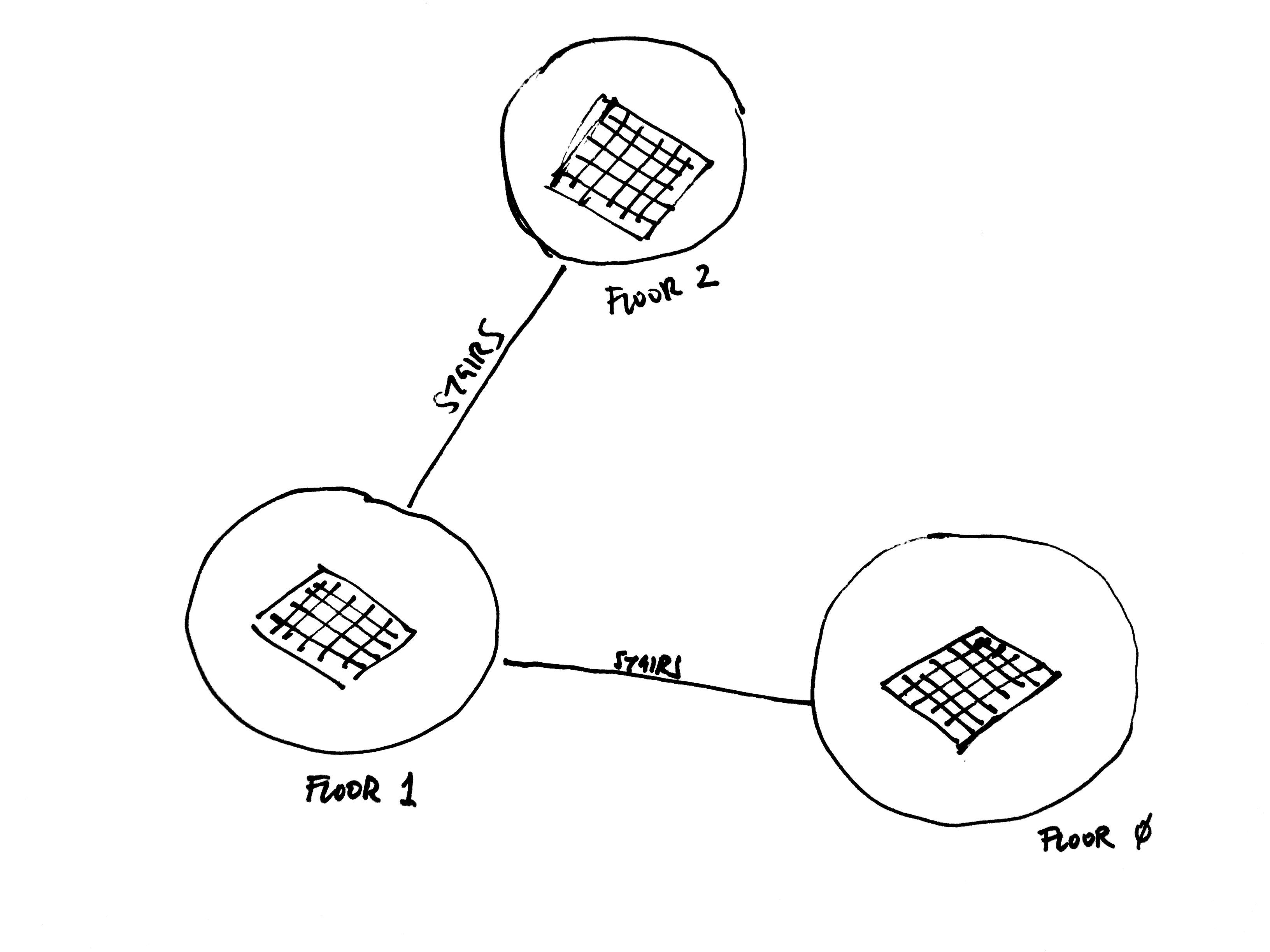

Multi-floor movement was handled by standard A* pathfinding:

Each floor is represented as a grid, and the layout of the building is represented as a network where each node is a floor and edges are staircases. When an agent wants to move to a position that’s on another floor, they first generate a route through this building network to figure out which floors they need to go through, trying to minimize overall distance. Then, for each floor, they find the path to the closest stairs and go to the next floor until they reach their target.

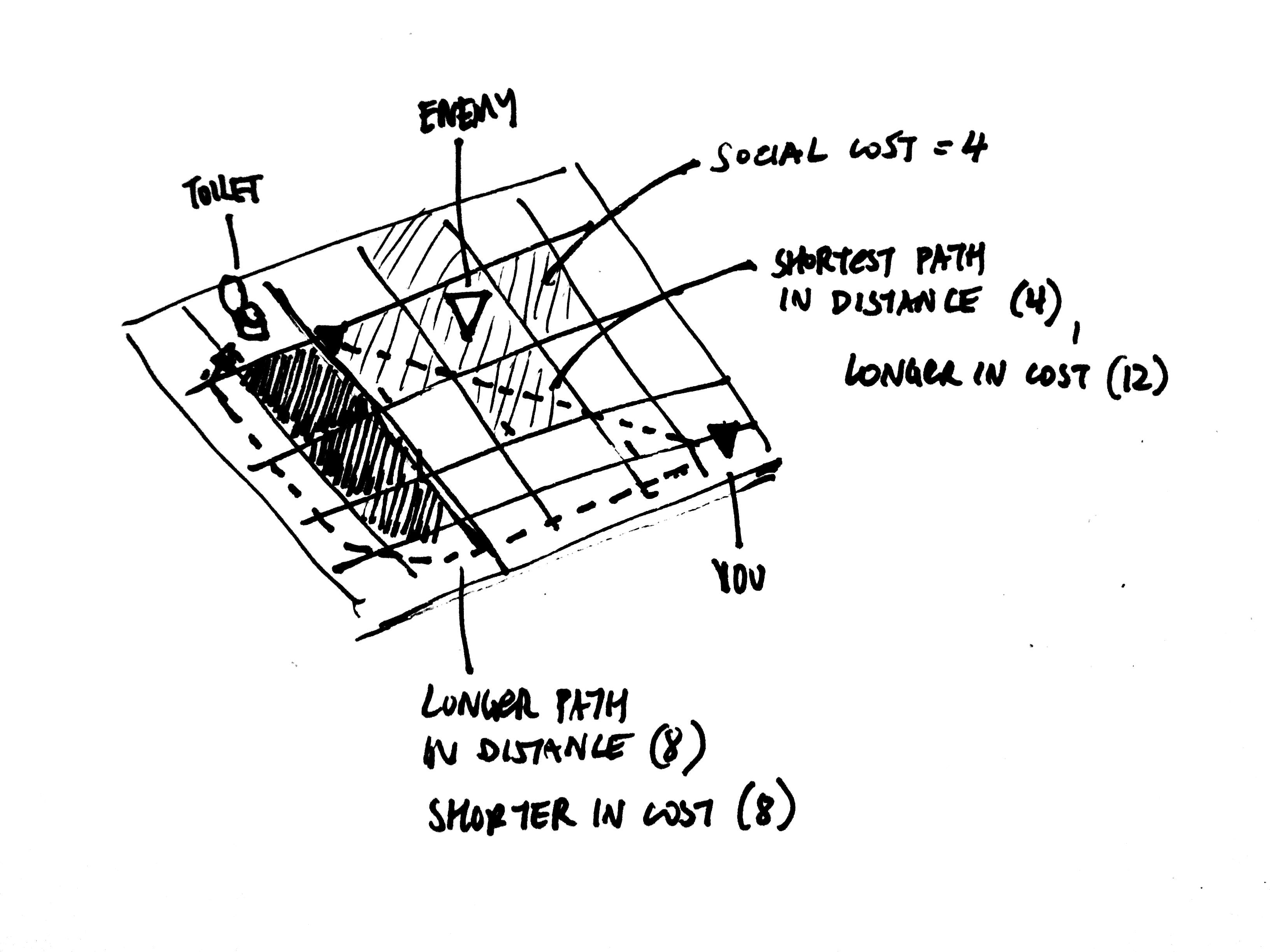
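The two levels of search described above can be sketched like this, in Python and under simplifying assumptions. The building-level search here is a plain breadth-first search that minimizes the number of floor changes rather than overall distance, and the per-floor search is a textbook A* with unit step costs and a Manhattan heuristic. The function names and data shapes are hypothetical.

```python
import heapq
from collections import deque


def floor_route(stairs, start, goal):
    """Breadth-first search over the building network.
    stairs is a list of (floor_a, floor_b) pairs; returns the floors to
    pass through, or None if the goal floor is unreachable."""
    neighbors = {}
    for a, b in stairs:
        neighbors.setdefault(a, []).append(b)
        neighbors.setdefault(b, []).append(a)
    came_from = {start: None}
    queue = deque([start])
    while queue:
        floor = queue.popleft()
        if floor == goal:
            route = []
            while floor is not None:
                route.append(floor)
                floor = came_from[floor]
            return route[::-1]
        for nxt in neighbors.get(floor, []):
            if nxt not in came_from:
                came_from[nxt] = floor
                queue.append(nxt)
    return None


def astar(grid, start, goal):
    """A* on a single floor. grid[r][c] is truthy where walkable."""
    def h(pos):
        return abs(pos[0] - goal[0]) + abs(pos[1] - goal[1])

    frontier = [(h(start), 0, start)]
    cost = {start: 0}
    came_from = {start: None}
    while frontier:
        _, g, cur = heapq.heappop(frontier)
        if cur == goal:
            path = []
            while cur is not None:
                path.append(cur)
                cur = came_from[cur]
            return path[::-1]
        if g > cost[cur]:
            continue  # stale queue entry
        r, c = cur
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if not (0 <= nr < len(grid) and 0 <= nc < len(grid[nr]) and grid[nr][nc]):
                continue
            new_cost = g + 1
            if new_cost < cost.get(nxt, float("inf")):
                cost[nxt] = new_cost
                came_from[nxt] = cur
                heapq.heappush(frontier, (new_cost + h(nxt), new_cost, nxt))
    return None
```

An agent would call `floor_route` once to get the sequence of floors, then run `astar` on each floor in turn, from its current position to the stairs leading to the next floor in the route.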

There are some improvements that I’d really like to make to the pathfinding system. Currently each position is weighted the same, but it’d be great if we held different position weights for each individual agent. With this we’d be able to represent, for instance, subjective social costs of spaces. For example, I need to go to the bathroom. Normally I’d take the quickest path there, but now there’s someone I don’t want to talk to along the way. Thus the movement cost of the positions around that person is higher to me than it is to others (assuming everyone else doesn’t mind them), so I’d take a path which is longer in terms of physical distance, but less imposing in terms of overall cost when considering this social aspect.
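A sketch of what per-agent weights might look like, again in Python and purely as an assumption about one way to do it: the search takes a cost function, and each agent supplies its own. Here the search is Dijkstra's algorithm (A* without a heuristic), and `social_cost` is a made-up helper that adds a penalty to cells near a person the agent wants to avoid.

```python
import heapq


def cheapest_path(grid, start, goal, cell_cost):
    """Dijkstra over walkable cells. cell_cost(pos) is the positive cost
    this particular agent pays for stepping onto pos."""
    frontier = [(0, start)]
    best = {start: 0}
    came_from = {start: None}
    while frontier:
        cost, cur = heapq.heappop(frontier)
        if cur == goal:
            path = []
            while cur is not None:
                path.append(cur)
                cur = came_from[cur]
            return path[::-1]
        if cost > best[cur]:
            continue  # stale queue entry
        r, c = cur
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if not (0 <= nr < len(grid) and 0 <= nc < len(grid[nr]) and grid[nr][nc]):
                continue
            new_cost = cost + cell_cost(nxt)
            if new_cost < best.get(nxt, float("inf")):
                best[nxt] = new_cost
                came_from[nxt] = cur
                heapq.heappush(frontier, (new_cost, nxt))
    return None


def social_cost(avoid, radius=1, penalty=10):
    """Cost function for an agent who dislikes whoever stands at `avoid`."""
    def cost(pos):
        near = abs(pos[0] - avoid[0]) + abs(pos[1] - avoid[1]) <= radius
        return 1 + (penalty if near else 0)
    return cost


# An open 5x5 room; someone is standing in the middle at (2, 2).
grid = [[1] * 5 for _ in range(5)]
indifferent = cheapest_path(grid, (2, 0), (2, 4), lambda pos: 1)
avoidant = cheapest_path(grid, (2, 0), (2, 4), social_cost(avoid=(2, 2)))
```

The indifferent agent walks straight across the room, while the avoidant one takes a physically longer detour that never comes within one cell of the person in the middle.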

Those are the important bits of the agent part. When we used highrise for Party Fortress (more on that below), this was enough to support all the behaviors we needed.

Party Fortress

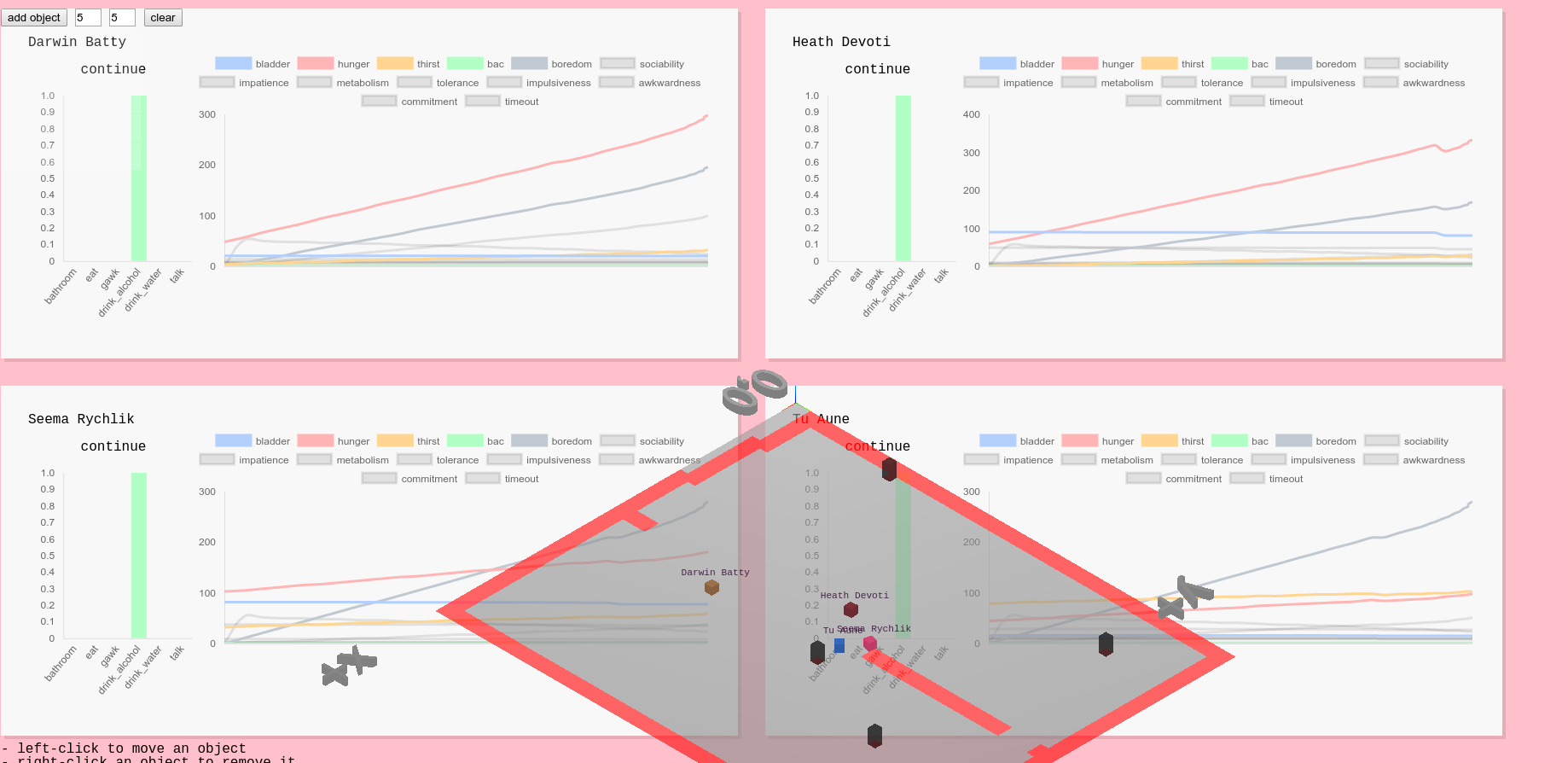

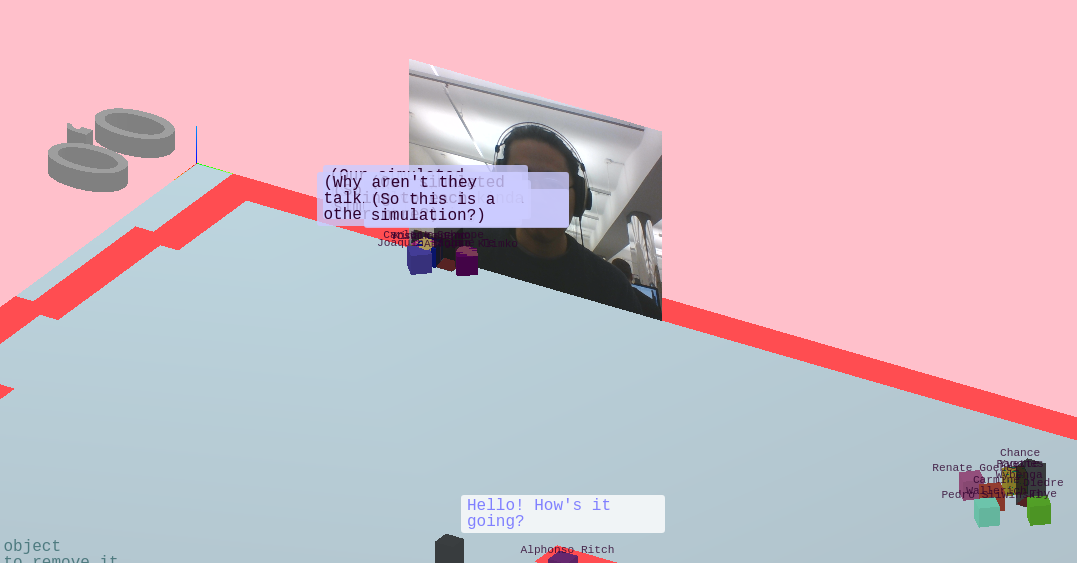

Since the original inspiration for highrise was parties, we wanted to throw a party to prototype the tool. This culminated in a small gathering, “Party Fortress” (named after Dwarf Fortress), where we ran a simulated party in parallel to the actual party, projected onto a wall.

Behaviors

We wanted to start by simulating a “minimum viable party” (MVP), so the set of actions in Party Fortress is limited, but essential for partying. These include: going to the bathroom, talking, drinking alcohol, drinking water, and eating.

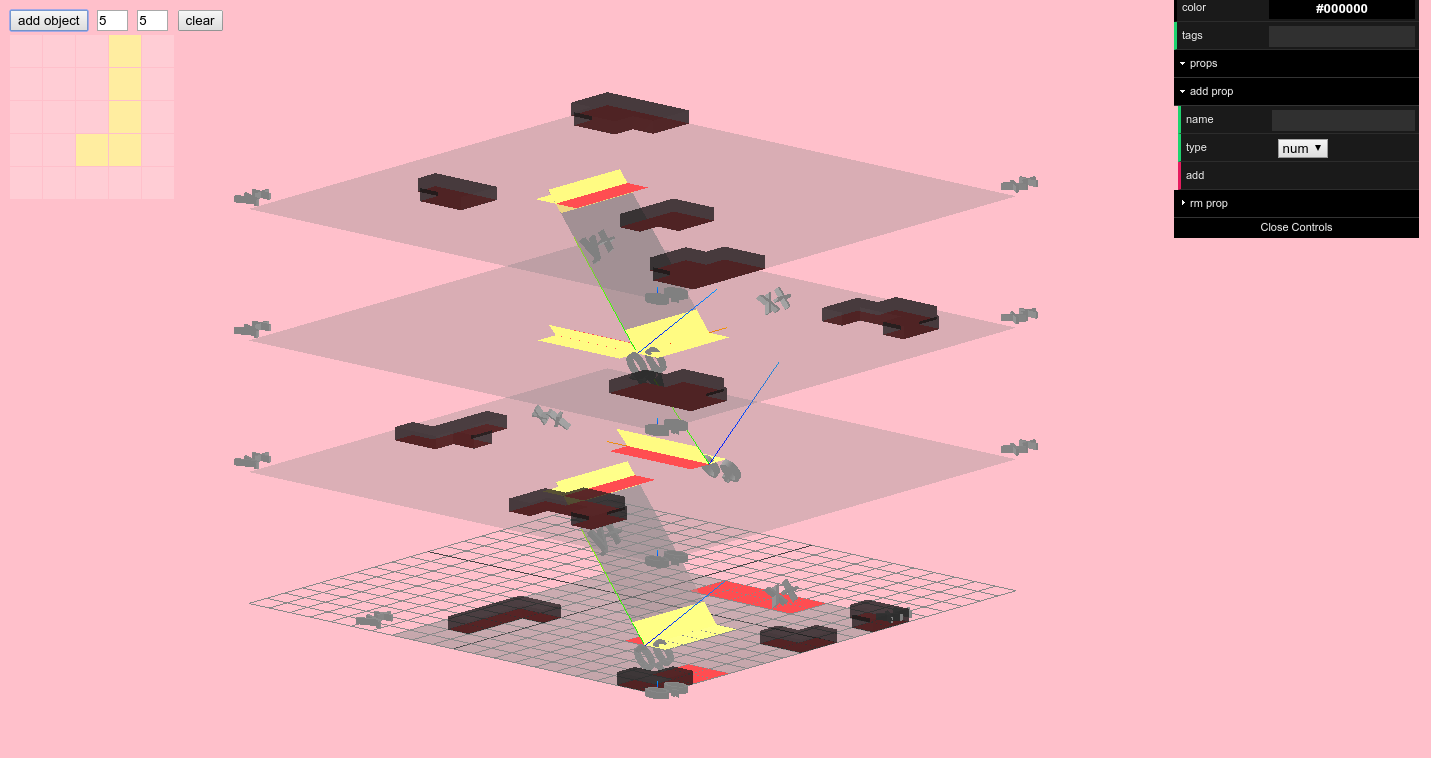

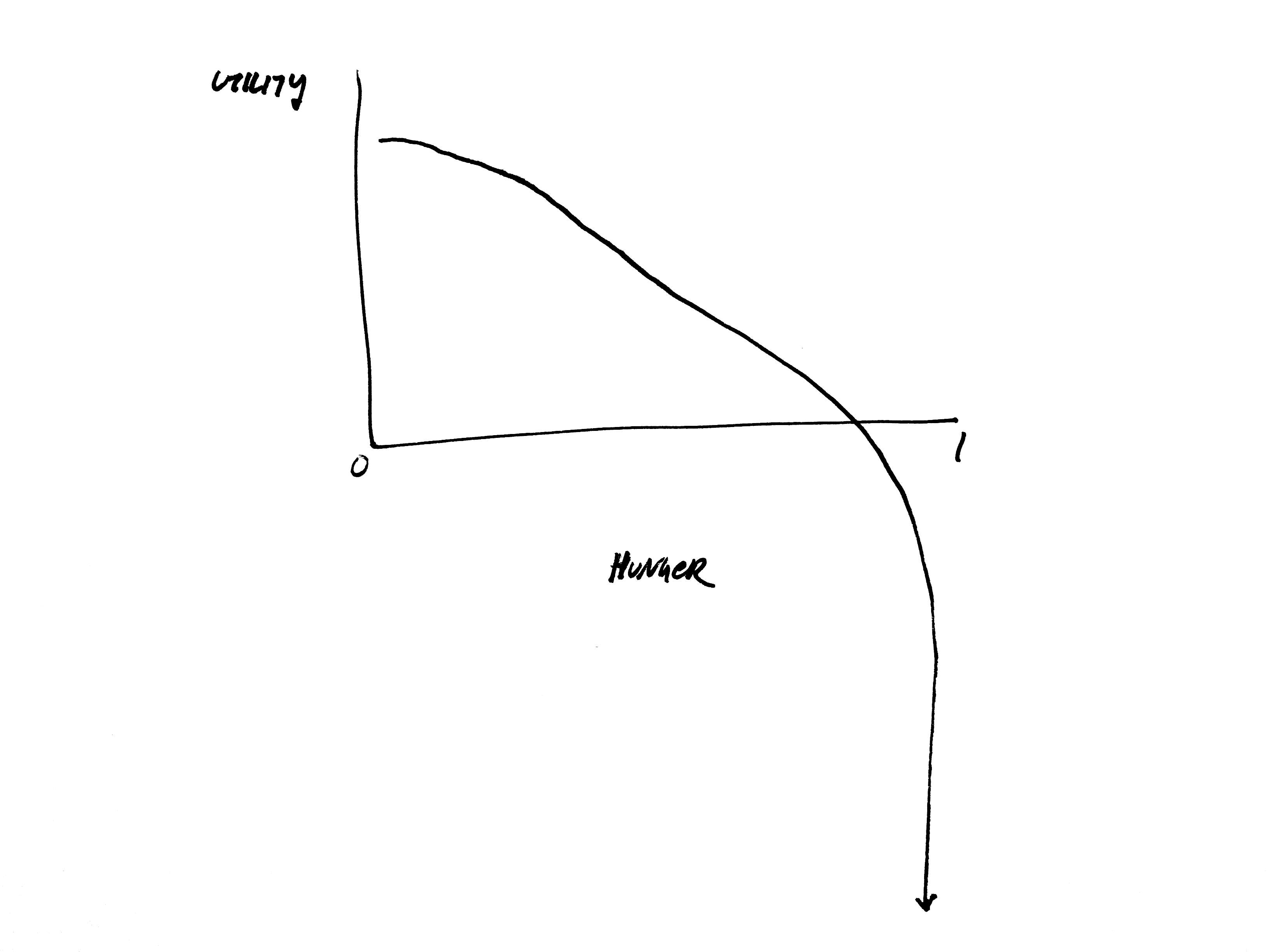

The key to generating plausible agent behavior is the design of utility functions. Generally you want your utility functions to capture the details of whatever phenomena you’re describing (this polynomial designer tool was developed to help us with this).

For example, consider hunger: when hunger is 0, utility should be pretty high. As you get hungry, utility starts to decrease. If you get too hungry, you die. So, assuming that our agents don’t want to die (every simulation involves assumptions), we’d want our hunger utility function to asymptote to negative infinity as hunger increases. Since agents use this utility to decide what to do, if they are really, really hungry they will prioritize eating above all else since that will have the largest positive impact on their utility.
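One function with this shape, given here only as an illustrative assumption (the actual Party Fortress functions were built with the polynomial designer and are not reproduced here), is a shifted logarithm that starts at its maximum when hunger is 0 and falls to negative infinity at a lethal hunger level. The `lethal` and `best` parameters are made up.

```python
import math


def hunger_utility(hunger, lethal=100.0, best=1.0):
    """Utility of a hunger level: `best` at 0, negative infinity at `lethal`."""
    hunger = max(hunger, 0.0)
    if hunger >= lethal:
        return float("-inf")
    return best + math.log(1.0 - hunger / lethal)


def eating_gain(hunger, meal=10.0):
    """Utility gained by eating a meal that removes `meal` hunger."""
    return hunger_utility(hunger - meal) - hunger_utility(hunger)
```

Because the curve steepens as hunger approaches the lethal level, `eating_gain(90)` is several times larger than `eating_gain(30)`, which is what pushes a very hungry agent to put eating ahead of everything else.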

So we spent a lot of time calibrating these functions. The more actions and state variables you add, the more complex this gets and the harder calibration becomes. We’re still trying to figure out a way to make this a more streamlined process involving less trial-and-error, but one helpful feature was visualizing agents’ states over time.

Commitment

One challenge with spatial agents is that as they are moving to their destination, they may suddenly decide to do something else. Then, on the way to that new target, they again may decide to do something else. So agents can get stuck in this fickleness and never actually accomplish anything.

To work around this we incorporated a commitment variable for each agent. It feels a bit hacky, but basically when an agent decides to do something, they have some resolve to stick with it unless some other action becomes overwhelmingly more important. Technically this works out to mean that whatever action an agent does has its utility artificially inflated (so it’s more appealing to continue doing it) until they finally execute it or the commitment wears off. This could also be called stubbornness.
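A minimal sketch of the idea in Python, assuming the inflation is a simple additive bonus that decays geometrically each frame. Both of those choices, and the function names, are assumptions for illustration.

```python
def choose_action(utilities, current=None, commitment=0.0):
    """Pick the highest-scoring action, where the action already in
    progress gets a commitment bonus added to its utility."""
    def score(action):
        bonus = commitment if action == current else 0.0
        return utilities[action] + bonus
    return max(utilities, key=score)


def decay(commitment, rate=0.8):
    """Commitment wears off a little every frame."""
    return commitment * rate
```

For example, with utilities `{"eat": 1.0, "talk": 1.2}`, an agent already heading for the food with a commitment of 0.5 keeps going, even though talking is nominally better. A much more urgent option, such as a bathroom trip with utility 5.0, still overrides the commitment. After enough calls to `decay`, the bonus shrinks below the 0.2 gap and the agent becomes free to switch to talking.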

Conversation

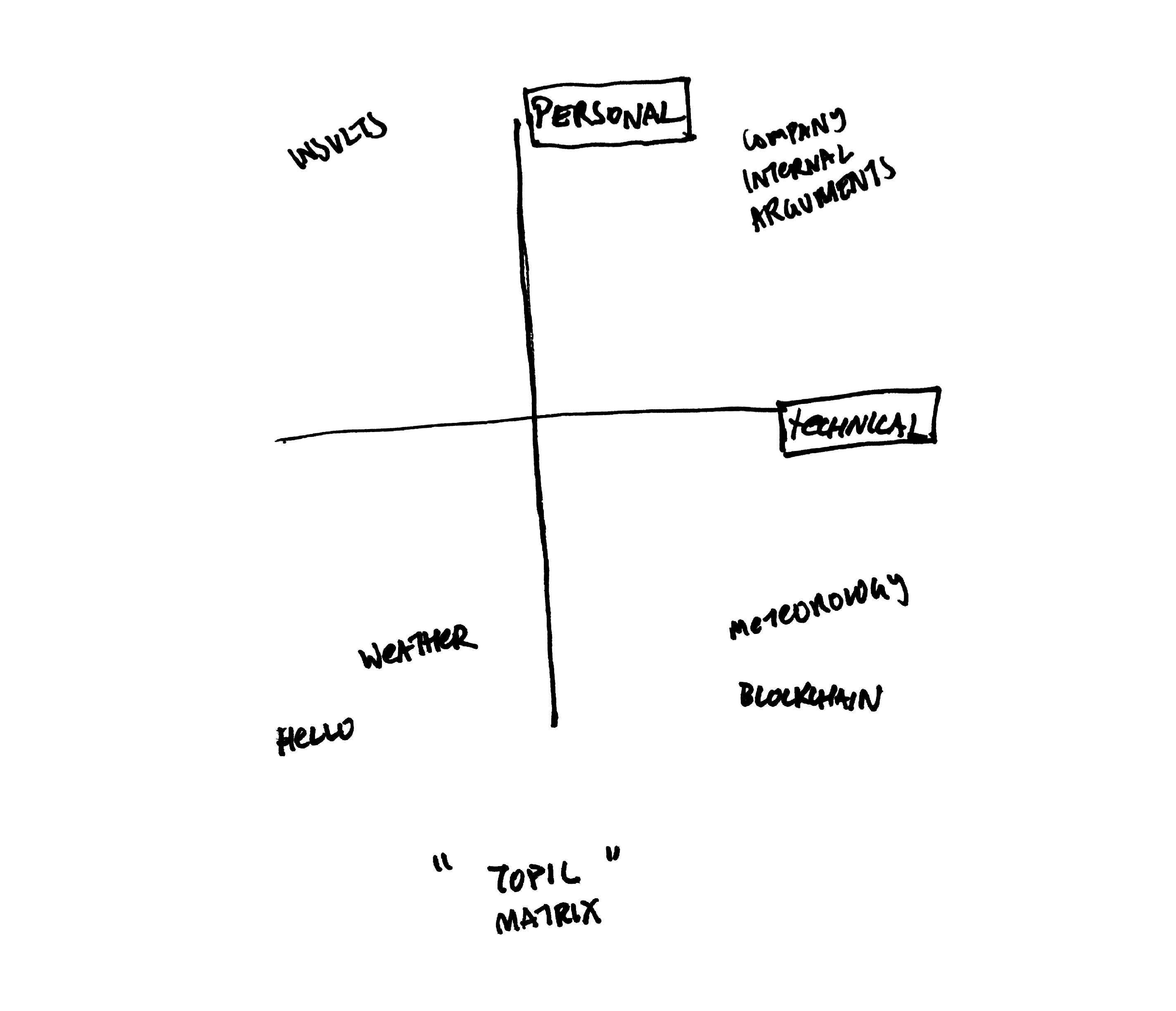

Since conversation is such an important part of parties, we wanted to model it in higher fidelity than the other actions. This took the form of having varying topics of conversation and bestowing agents with preferences for particular topics.

We defined a 2D “topic space” or “topic matrix”, where one axis is how “technical” the topic is and the other is how “personal” the topic is. For instance, a low technical, low personal topic might be the weather. A high technical but low personal topic might be the blockchain.

Agents don’t know what topic to talk about with an agent they don’t know, but they have a really basic conversation model which allows them to learn (kind of, this needs work). They’ll try different things, try to gauge how the other person responds, and try to remember this.
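Here is one way the topic space and the try-and-remember loop could look, sketched in Python. Everything specific is an assumption: the coordinates, the extra `childhood` topic, the distance-based `enjoyment` score, and the running-average `TopicMemory` are invented for illustration and are not the Party Fortress conversation model.

```python
import math

# Topic -> (technical, personal), each in [0, 1]. Coordinates are made up.
TOPICS = {
    "weather": (0.1, 0.1),
    "blockchain": (0.9, 0.1),
    "childhood": (0.1, 0.9),  # hypothetical extra topic
}


def enjoyment(preference, topic):
    """1.0 when the topic sits on the listener's preferred point in topic
    space, falling to 0.0 at the opposite corner of the unit square."""
    return 1.0 - math.dist(preference, TOPICS[topic]) / math.sqrt(2)


class TopicMemory:
    """What one agent has learned about one conversation partner."""

    def __init__(self):
        self.estimates = {}  # topic -> running average of responses
        self.counts = {}

    def pick(self):
        # Try everything once, then stick with what has gone over best.
        untried = [t for t in TOPICS if t not in self.estimates]
        if untried:
            return untried[0]
        return max(self.estimates, key=self.estimates.get)

    def observe(self, topic, response):
        n = self.counts.get(topic, 0) + 1
        old = self.estimates.get(topic, 0.0)
        self.counts[topic] = n
        self.estimates[topic] = old + (response - old) / n


# A listener who likes technical, impersonal topics.
listener = (0.8, 0.2)
memory = TopicMemory()
for _ in range(5):
    topic = memory.pick()
    memory.observe(topic, enjoyment(listener, topic))
```

After trying each topic once, the speaker settles on `blockchain` for this listener, since that is the topic closest to the listener's preferred point.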

Social Network

As specified so far, our implementation of agents doesn’t capture, explicitly at least, the relationships between individual agents. In the context of a social simulation this is obviously pretty important.

For Party Fortress we implemented a really simple social network so we could represent pre-existing friendships and capture forming ones as well. The social network is modified through conversation and the valence and strength of modification is based on what topics people like. For example, if we talk about a topic we both like, our affinity increases in the social graph.
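A sketch of such a graph in Python, with loudly stated assumptions: affinity is treated as symmetric, each person's liking for the topic is a number in [-1, 1], and the update uses the lower of the two likings so that affinity rises only when both enjoy the topic. None of this is taken from the Party Fortress code, and the names are placeholders.

```python
class SocialNetwork:
    """Undirected graph of pairwise affinities (hypothetical structure)."""

    def __init__(self):
        self.affinity = {}  # frozenset({a, b}) -> float

    def get(self, a, b):
        return self.affinity.get(frozenset((a, b)), 0.0)

    def converse(self, a, b, like_a, like_b, rate=0.1):
        """Update affinity after a and b discuss a topic.
        like_a and like_b are in [-1, 1]. The sign of the change (valence)
        and its size (strength) both come from the less enthusiastic of
        the two, which is an assumption of this sketch."""
        key = frozenset((a, b))
        self.affinity[key] = self.get(a, b) + rate * min(like_a, like_b)
        return self.affinity[key]


net = SocialNetwork()
net.converse("alice", "bob", like_a=0.8, like_b=0.6)   # both like the topic
after_good = net.get("bob", "alice")
net.converse("alice", "bob", like_a=0.8, like_b=-0.5)  # bob dislikes this one
after_bad = net.get("alice", "bob")
```

Pre-existing friendships could be represented by seeding `affinity` with positive values before the party starts.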

Narrative

It’s not very interesting to watch the simulation with no other indicators of what’s happening. These are people we’re supposed to be simulating and so we have some curiosity and expectations about their internal states.

We implemented a narrative system where agents will report exactly what they’re doing in colorful ways.

Closing the loop

Our plan for the party was to project the simulation on the wall as the party went on. But that introduces an anomaly where our viewing of the simulation may influence our behavior. We needed the simulation itself to capture this possibility - so we integrated a webcam stream into the simulation and introduced a new action for agents: “gawk”. Now they are free to come and watch us, the “real” world, just as we can watch them.

We have a few other ideas for “closing the loop” that we weren’t able to implement in time for Party Fortress I, such as more direct communication with simulants (e.g. via SMS).

TThhEe PPaARRtTYY

We hosted Party Fortress at Prime Produce, a space that Dan has been working on for some time.

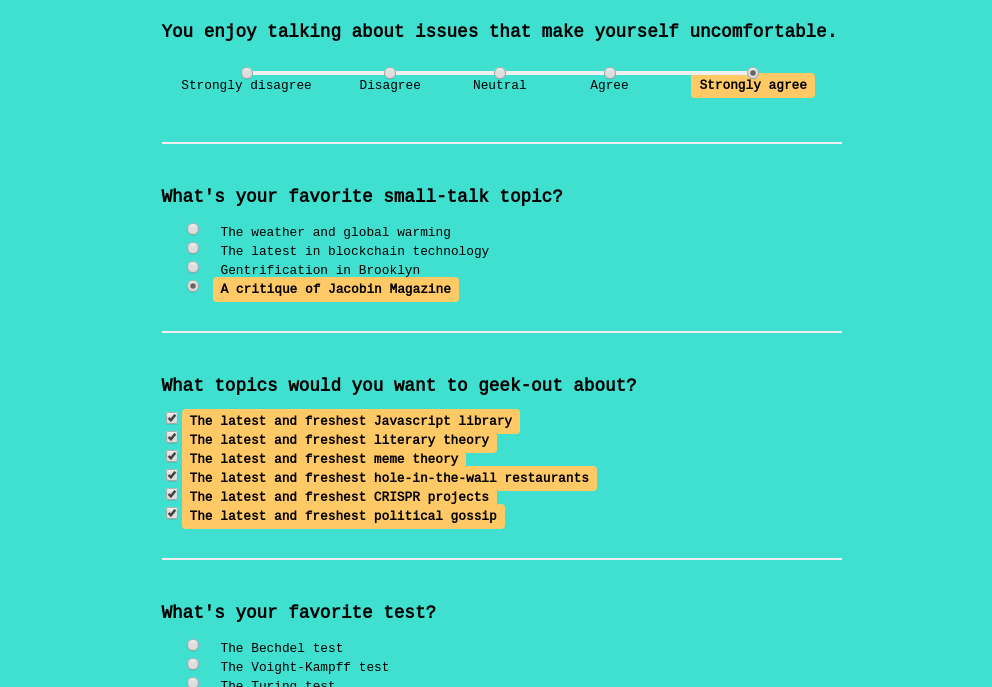

We had guests fill out a questionnaire as they arrived, designed to extract some important personality features. When they submitted the questionnaire, a version of themselves would appear in the simulation and carry on partying.

Surprisingly, there were several moments of synchronization between the “real” party and the simulated one. For instance, people talking or eating when the simulation “predicted” it. Some of the topics that were part of the simulation came up independently in conversation (most notably “blockchain”, but that was sort of a given with the crowd at the party). And of course seeing certain topics come up in the simulation spurred those topics coming up outside of it too.

Afterwards our attendees had a lot of good feedback on the experience. Maybe the most important bit of feedback was that the two parties felt too independent; we need to incorporate more ways for party-goers to feel like they can influence the simulated party and vice versa.

It was a good first step - we’re looking to host more of these parties in the future and expand highrise so that it can encompass weirder and more bizarre parties.