Fugue Devlog 18: Character Generation

The character generation system is starting to come together. There are still a few things to figure out, but I think I have the bulk of it worked out.

The character generation system is roughly broken down into four sub-problems:

- Mesh generation: how will the actual geometry of a character be generated?

- Texturing: how will the skin-level features (skin tone, eyes, eyebrows, nose, mouth, close-cut hair, facial hair, tattoos, scars, etc) of a character be generated?

- Clothing/hair: how will additional geometry, like clothing and hair, be generated and mapped to the human mesh?

- Rigging: how will the character’s skeleton be configured?

(A fifth sub-problem could be “animation” but I’ll handle that separately.)

A good system will encompass these sub-problems and also make it easy to:

- generate characters according to specific constraints through a single UI (eventually as a tab in the CMS)

- add or modify clothing, hair, etc

- generate a large amount of variation across characters

In summary, the whole system should be represented by a single configuration screen, where a single press of a button produces a fully rigged and clothed character model. I’m not quite at that point yet, but I’m making good progress.

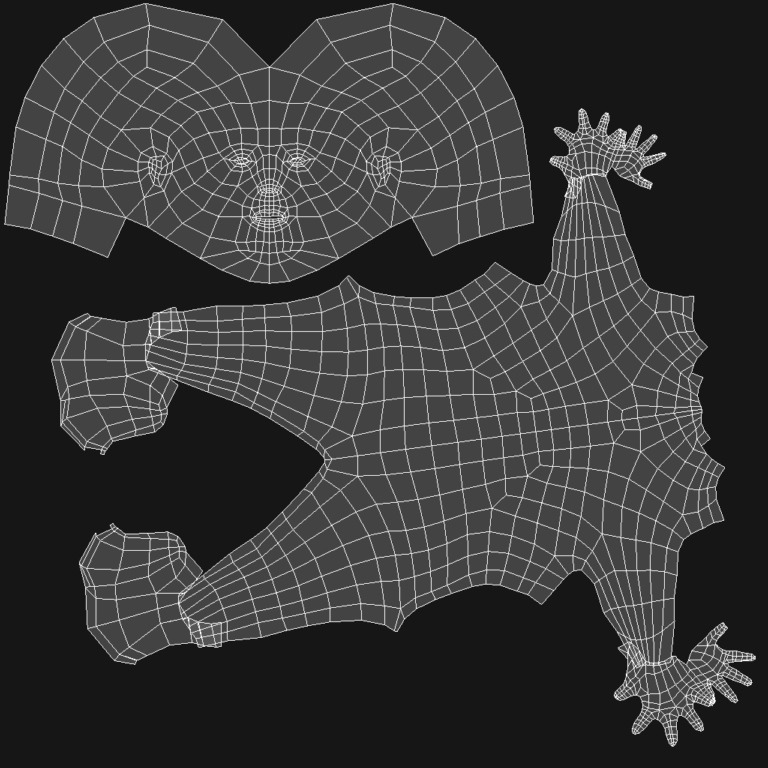

The system so far is built entirely around the open source MakeHuman, which makes the whole process much simpler. It generates parameterized human meshes, makes it easy to add components like clothing, and has an addon for working directly within Blender. MakeHuman works by generating a “base mesh” that can then be used with other meshes (“proxies”) that map to the vertices of the base mesh. When the base mesh is transformed, either through animation or through a variety of body shape/proportion parameters, these proxies are transformed too. Clothes are proxies, but so are “topologies”, which replace the base mesh as the human mesh. This allows me to use a custom lower-poly mesh instead of the higher-resolution default mesh.
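To make the proxy idea concrete, here is a simplified sketch of how a proxy vertex can follow the base mesh. It assumes each proxy vertex is bound to three base-mesh vertices by blend weights plus a fixed offset; the `ProxyVertex` class and `fit_proxy` function are hypothetical illustrations, not MakeHuman’s API, and MakeHuman’s own proxy handling covers more details (such as scaling the offsets with the body).

```python
from dataclasses import dataclass

Vec3 = tuple[float, float, float]


@dataclass
class ProxyVertex:
    """Hypothetical binding of one proxy vertex to the base mesh."""
    refs: tuple[int, int, int]           # indices of three base-mesh vertices
    weights: tuple[float, float, float]  # blend weights, summing to 1
    offset: Vec3                         # displacement from the blended point (kept constant here)


def fit_proxy(base_verts: list[Vec3], proxy: list[ProxyVertex]) -> list[Vec3]:
    """Recompute every proxy vertex from the base mesh's current positions."""
    fitted = []
    for pv in proxy:
        x = y = z = 0.0
        for idx, w in zip(pv.refs, pv.weights):
            bx, by, bz = base_verts[idx]
            x, y, z = x + w * bx, y + w * by, z + w * bz
        ox, oy, oz = pv.offset
        fitted.append((x + ox, y + oy, z + oz))
    return fitted


base = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
shirt = [ProxyVertex((0, 1, 2), (1 / 3, 1 / 3, 1 / 3), (0.0, 0.0, 0.1))]
print(fit_proxy(base, shirt))
# Widen the base mesh (as a body-shape parameter might); the shirt follows.
wider = [(2 * x, y, z) for x, y, z in base]
print(fit_proxy(wider, shirt))
```

Because the proxy only stores its binding, any change to the base mesh (a shape parameter or an animated pose) carries over to the clothing without editing the clothing itself.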

So MakeHuman takes care of mesh generation, and it provides a way to attach clothing and hair. The clothing and hair still need to be modeled individually, but this is a less daunting task since I figure I’ll only need to create a relatively small number of clothing forms, each with several different textures. It may be easier to automate the generation of some of these textures, e.g. color variations. In the end this is not too different from your run-of-the-mill modeling and texturing; there are just a couple of extra steps to ensure that the clothes map onto the human mesh correctly.
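As one possible way to automate color variations, the sketch below rotates the hue of every pixel using Python’s standard `colorsys` module. It assumes the garment texture is available as a plain list of RGB pixels; `hue_shift` and `color_variants` are hypothetical helpers, and this is only one technique among several (a real texture would likely need masks so that details like buttons keep their color).

```python
import colorsys

RGB = tuple[int, int, int]


def hue_shift(pixels: list[RGB], shift: float) -> list[RGB]:
    """Rotate the hue of every pixel by `shift`, a fraction of a full turn."""
    out = []
    for r, g, b in pixels:
        h, l, s = colorsys.rgb_to_hls(r / 255, g / 255, b / 255)
        nr, ng, nb = colorsys.hls_to_rgb((h + shift) % 1.0, l, s)
        out.append((round(nr * 255), round(ng * 255), round(nb * 255)))
    return out


def color_variants(pixels: list[RGB], n: int) -> list[list[RGB]]:
    """Produce n variants with evenly spaced hues; variant 0 is the original."""
    return [hue_shift(pixels, i / n) for i in range(n)]


red_shirt = [(200, 30, 30), (150, 20, 20)]
for variant in color_variants(red_shirt, 3):
    print(variant)
```

Lightness and saturation are preserved, so shading painted into the texture survives the color change.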

MakeHuman also generates a rig for the mesh, so that sub-problem may be taken care of too. But because I haven’t figured out the animation process, I don’t know exactly if/how I’ll integrate the auto-generated rig. For my test characters I’ve just been using Mixamo’s auto-rigger…so for now this one needs more work.

So that leaves texturing, or what I called “skin-level features”. These are features that don’t really have any volume to them, such as tattoos, scars, undergarments, and socks. This isn’t too difficult in theory: you just need to generate an image texture. The approach is to work in layers and assemble a final texture by sampling/generating different layers for different features. So the bottom-most layer is the skin tone, and on top of that you’d paste layers of underwear, socks, eyes, nose, mouth, etc.
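The layering step boils down to the standard “over” compositing operator applied from the bottom layer up. A minimal sketch, assuming every layer is a same-sized, flattened list of RGBA pixels with channels in [0, 1] and straight (non-premultiplied) alpha; the `over` and `composite` names are hypothetical, and a real pipeline would use an image library rather than Python lists.

```python
RGBA = tuple[float, float, float, float]  # channels in [0, 1], straight alpha


def over(top: RGBA, bottom: RGBA) -> RGBA:
    """Porter-Duff 'over': composite one pixel on top of another."""
    tr, tg, tb, ta = top
    br, bg, bb, ba = bottom
    out_a = ta + ba * (1 - ta)
    if out_a == 0:
        return (0.0, 0.0, 0.0, 0.0)

    def mix(t: float, b: float) -> float:
        return (t * ta + b * ba * (1 - ta)) / out_a

    return (mix(tr, br), mix(tg, bg), mix(tb, bb), out_a)


def composite(layers: list[list[RGBA]]) -> list[RGBA]:
    """Flatten same-sized layers, listed bottom-most first (skin tone first)."""
    result = list(layers[0])
    for layer in layers[1:]:
        result = [over(t, b) for t, b in zip(layer, result)]
    return result


# A tiny 2x2 "texture": skin everywhere, socks on the bottom row,
# and a semi-transparent tattoo in the top-left pixel.
clear = (0.0, 0.0, 0.0, 0.0)
skin = [(0.8, 0.6, 0.5, 1.0)] * 4
socks = [clear, clear, (1.0, 1.0, 1.0, 1.0), (1.0, 1.0, 1.0, 1.0)]
tattoo = [(0.1, 0.1, 0.3, 0.5), clear, clear, clear]
print(composite([skin, socks, tattoo]))
```

Since each feature lives on its own layer, swapping one pair of socks or one tattoo for another only means picking a different layer before compositing.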

The face is of course very important here, and it’s the hardest to get right. I don’t yet have a good way of generating facial features. While the other parts (socks, undergarments, etc) can be made by hand because they don’t require a ton of variation (e.g. I could probably get away with like 20 different pairs of socks), faces should be unique per character (PCs and NPCs alike). I would rather not have to create all of these by hand.

I’ve had some success using Stable Diffusion to generate faces to work from, but it’s not consistent enough to automate (faces may not be head-on and so require some manual adjusting, for example). I think a parameterized generator might make the most sense here, where, for example, facial features are defined by Bézier curves with constrained parameter ranges, and each face is just a sample of that parameter space. There could then be a pool of textures (for skin tones, lip color, eye color, etc) that are also sampled from to fill in the details.
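Here is a rough sketch of what “a feature is a sample of a constrained parameter space” could look like for a single feature, an upper eyelid drawn as one cubic Bézier curve. The control-point ranges, the `UPPER_LID` table, and the helper names are all hypothetical, and uniform sampling within each range is an assumption; a full face would combine many such curves.

```python
import random

Point = tuple[float, float]

# Hypothetical parameter space for an upper eyelid, in normalized texture
# coordinates: an allowed (x range, y range) for each of the four control points.
UPPER_LID = [
    ((0.00, 0.00), (0.00, 0.00)),    # inner corner, pinned
    ((0.20, 0.35), (0.10, 0.25)),    # first handle: controls lid height
    ((0.65, 0.80), (0.10, 0.25)),    # second handle
    ((1.00, 1.00), (-0.05, 0.05)),   # outer corner: allows a slight tilt
]


def sample_controls(ranges, rng: random.Random) -> list[Point]:
    """Draw one control point per range, uniformly (an assumption)."""
    return [(rng.uniform(*xr), rng.uniform(*yr)) for xr, yr in ranges]


def cubic_bezier(p0: Point, p1: Point, p2: Point, p3: Point, t: float) -> Point:
    """Evaluate a cubic Bezier curve at parameter t in [0, 1]."""
    u = 1.0 - t
    a, b, c, d = u**3, 3 * u * u * t, 3 * u * t * t, t**3
    return (a * p0[0] + b * p1[0] + c * p2[0] + d * p3[0],
            a * p0[1] + b * p1[1] + c * p2[1] + d * p3[1])


def eyelid_curve(rng: random.Random, steps: int = 16) -> list[Point]:
    """Sample one eyelid shape and return it as a polyline for rasterizing."""
    controls = sample_controls(UPPER_LID, rng)
    return [cubic_bezier(*controls, i / steps) for i in range(steps + 1)]


# A per-character seed makes the same face reproducible later.
lid = eyelid_curve(random.Random(42))
print(lid[:3])
```

A Bézier curve always stays inside the convex hull of its control points, so constraining the control points is enough to keep the whole feature within a plausible region.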

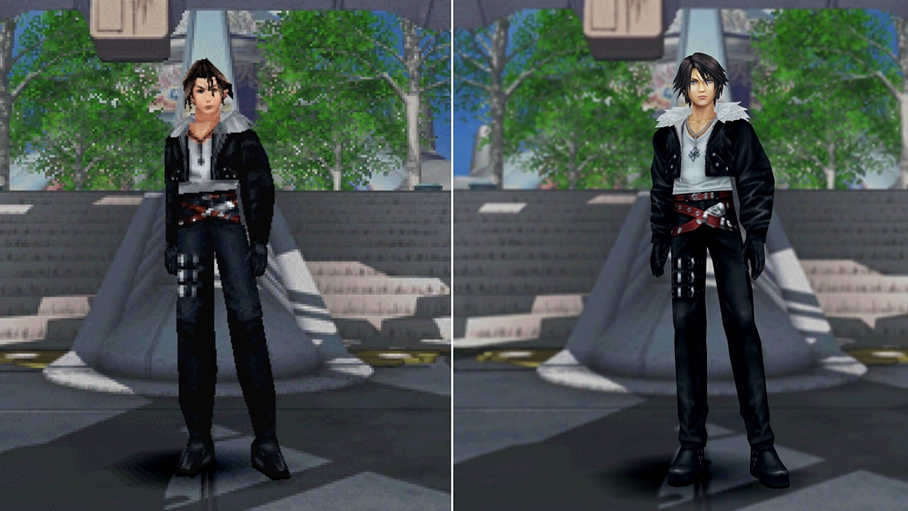

For testing I created the skin-level texture by hand, just so I could place a character into the game and see whether it works visually:

And here is a comparison with the screen effects, without the dithering, and without the resolution downsampling:

The face definitely needs work, but I feel OK (though not thrilled) about everything else. Apart from the face, it does feel somewhere between the graphics of the original FF8 and its remaster, which is sort of what I was going for. I think I need to sit with it for a while, see how it feels as the rest of the game’s environments develop, and try different character models, clothing, etc. It’s at least a starting point: I feel a bit reassured that I have some method for generating decent-looking characters, one that could be modified if needed.

On this point: I’m kind of hoping that all the models and characters and so on just kind of work together visually, but I’m not expecting that to be the case. I’m trying to design this character generation system so that I can make adjustments to, e.g., textures and models and have those adjustments propagate through all existing character models. That gives me more latitude to experiment with the game’s visual aesthetic and makes me feel less like I’m committing to a particular one so early on.

This brings me to the actual generation system. Everything up to this point is more about producing the assets that are then mixed and matched to generate the actual characters. I don’t want to allow for totally random character generation because some combinations are atypical or implausible. With clothes, for example, people generally don’t wear a dress and pants at the same time, so I want to prevent this particular outfit from being generated (apologies if you do dress this way regularly). A context-free grammar (CFG) makes the most sense to me because it lets you define set configurations that still have variation, avoiding the problems of complete randomness.

With a CFG you will essentially define different “outfits”, where each component of the outfit can be drawn from a pre-defined list of options. Say for example I need to generate a lot of road workers. A simple CFG might look like:

RoadWorker:

- HardHat

- TShirt

- HighVisVest

- WorkPants

- WorkBoots

HighVisVest:

- YellowHighVisVest

- OrangeHighVisVest

HardHat:

- YellowHardHat

- WhiteHardHat

- HardHatWithLight

HardHatWithLight:

- WhiteHardHatWithLight

- YellowHardHatWithLight

TShirt:

- RedShirt

- BlueShirt

- BlackShirt

WorkPants:

- CarpenterPants

- Jeans

WorkBoots:

- BrownWorkBoots

- BlackWorkBoots

Note that the RoadWorker rule lists components that every road worker wears, while the other rules list alternatives, one of which gets picked. A CFG is recursive in the sense that, if I want to create a RoadWorker, the program will see that HardHat itself can be expanded into different options, and then that one of those options, HardHatWithLight, can be expanded into more options. It keeps expanding until it has all the concrete options, and then samples from those.
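A minimal sketch of that expansion, assuming the grammar above is encoded as a Python dict where “all” rules include every component and “one” rules pick a single alternative. The encoding and the `options`/`generate` names are hypothetical, and it assumes choice rules only contain other choice rules or concrete terms.

```python
import random

# Hypothetical Python encoding of the RoadWorker grammar above.
# "all" rules list components every outfit includes; "one" rules list
# alternatives, exactly one of which is picked.
GRAMMAR = {
    "RoadWorker": ("all", ["HardHat", "TShirt", "HighVisVest", "WorkPants", "WorkBoots"]),
    "HardHat": ("one", ["YellowHardHat", "WhiteHardHat", "HardHatWithLight"]),
    "HardHatWithLight": ("one", ["WhiteHardHatWithLight", "YellowHardHatWithLight"]),
    "TShirt": ("one", ["RedShirt", "BlueShirt", "BlackShirt"]),
    "HighVisVest": ("one", ["YellowHighVisVest", "OrangeHighVisVest"]),
    "WorkPants": ("one", ["CarpenterPants", "Jeans"]),
    "WorkBoots": ("one", ["BrownWorkBoots", "BlackWorkBoots"]),
}


def options(symbol: str) -> list[str]:
    """Recursively flatten a symbol into every concrete option it can become."""
    if symbol not in GRAMMAR:
        return [symbol]  # terminal: an actual asset such as "BlueShirt"
    _, children = GRAMMAR[symbol]
    return [opt for child in children for opt in options(child)]


def generate(symbol: str, rng: random.Random) -> list[str]:
    """Expand a symbol into the list of terms that make up one character."""
    if symbol not in GRAMMAR:
        return [symbol]
    kind, children = GRAMMAR[symbol]
    if kind == "all":
        # Every component is included, each expanded independently.
        return [term for child in children for term in generate(child, rng)]
    # A choice: gather all concrete options first, then sample one.
    return [rng.choice(options(symbol))]


rng = random.Random(7)  # seeded so the same character can be reproduced
print(generate("RoadWorker", rng))
```

Because each choice is flattened before sampling, all four hard hats are equally likely. Choosing level by level instead (first among the three HardHat entries, then within HardHatWithLight) would give each lit hard hat a 1/6 chance, so the two strategies produce different distributions.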

Another feature to add is the ability to draw from the CFG with some fixed options. Say I’m generating an audience for a group where everyone has to wear an orange beret; I can fix that option so that the program only generates characters in outfits that are allowed to include an orange beret.
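One way fixed options could work, sketched under the same hypothetical dict encoding of the road worker grammar: first keep only the outfits whose grammar can reach every fixed term, then force any choice that can produce a fixed term to produce it. The `expand` and `generate_with` names are hypothetical. There is no orange beret in the road worker grammar, so fixing one raises an error instead of silently producing an outfit without it.

```python
import random

# Same hypothetical encoding of the RoadWorker grammar as a dict.
GRAMMAR = {
    "RoadWorker": ("all", ["HardHat", "TShirt", "HighVisVest", "WorkPants", "WorkBoots"]),
    "HardHat": ("one", ["YellowHardHat", "WhiteHardHat", "HardHatWithLight"]),
    "HardHatWithLight": ("one", ["WhiteHardHatWithLight", "YellowHardHatWithLight"]),
    "TShirt": ("one", ["RedShirt", "BlueShirt", "BlackShirt"]),
    "HighVisVest": ("one", ["YellowHighVisVest", "OrangeHighVisVest"]),
    "WorkPants": ("one", ["CarpenterPants", "Jeans"]),
    "WorkBoots": ("one", ["BrownWorkBoots", "BlackWorkBoots"]),
}


def options(symbol: str) -> list[str]:
    """Every concrete term reachable from `symbol`."""
    if symbol not in GRAMMAR:
        return [symbol]
    _, children = GRAMMAR[symbol]
    return [opt for child in children for opt in options(child)]


def expand(symbol: str, fixed: set[str], rng: random.Random) -> list[str]:
    """Expand `symbol`; any choice that can produce a fixed term is forced to."""
    if symbol not in GRAMMAR:
        return [symbol]
    kind, children = GRAMMAR[symbol]
    if kind == "all":
        return [t for child in children for t in expand(child, fixed, rng)]
    choices = options(symbol)
    pinned = [t for t in choices if t in fixed]
    return [rng.choice(pinned or choices)]


def generate_with(outfits: list[str], fixed, rng: random.Random):
    """Pick an outfit that can include every fixed term, then expand it."""
    fixed = set(fixed)
    allowed = [o for o in outfits if fixed <= set(options(o))]
    if not allowed:
        raise ValueError(f"no outfit can include {sorted(fixed)}")
    outfit = rng.choice(allowed)
    terms = expand(outfit, fixed, rng)
    if not fixed <= set(terms):
        # e.g. two fixed terms competing for the same slot
        raise ValueError(f"{outfit} can't include {sorted(fixed)} together")
    return outfit, terms


print(generate_with(["RoadWorker"], ["OrangeHighVisVest"], random.Random(3)))
```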

Finally, every time a character is generated with the CFG, the resulting model will be saved along with the combination of terms used to generate it (in the case of a RoadWorker that might be YellowHardHat,BlueShirt,OrangeHighVisVest,...). This makes it easy to “regenerate” or update existing characters if one of the underlying models changes. That way I can feel free to tweak the textures and models of clothing and other components without manually updating every character I’ve generated so far.
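A minimal sketch of the save/regenerate idea, assuming the recipe is stored as the comma-separated term string described above and that a hypothetical asset library maps each term to whatever asset currently implements it. The paths and the `save_recipe`, `rebuild`, and `affected` helpers are made up for illustration; the step that actually loads the assets into MakeHuman/Blender is left out.

```python
# Hypothetical asset library: each grammar term maps to the asset that
# currently implements it. The paths are made up for illustration.
ASSETS = {
    "YellowHardHat": "hats/yellow_hardhat_v1",
    "BlueShirt": "shirts/blue_shirt_v1",
    "OrangeHighVisVest": "vests/orange_vest_v1",
    "Jeans": "pants/jeans_v1",
    "BrownWorkBoots": "boots/brown_boots_v1",
}


def save_recipe(terms: list[str]) -> str:
    """Serialize the terms a character was generated from."""
    return ",".join(terms)


def rebuild(recipe: str, assets: dict[str, str]) -> list[str]:
    """Resolve a saved recipe against the *current* asset library.

    Raises KeyError if a term has since been removed from the library.
    """
    return [assets[term] for term in recipe.split(",")]


def affected(recipes: dict[str, str], term: str) -> list[str]:
    """Names of saved characters whose recipe uses `term`."""
    return [name for name, recipe in recipes.items() if term in recipe.split(",")]


recipes = {
    "worker_01": save_recipe(["YellowHardHat", "BlueShirt", "OrangeHighVisVest", "Jeans", "BrownWorkBoots"]),
}
# Tweak the blue shirt once; every character that uses it picks up the change.
ASSETS["BlueShirt"] = "shirts/blue_shirt_v2"
for name in affected(recipes, "BlueShirt"):
    print(name, rebuild(recipes[name], ASSETS))
```

Storing terms rather than resolved asset paths is what makes the propagation work: the recipe never changes, only what each term resolves to.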

In the near term this will probably all work via a Python script, but it would be amazing to be able to see changes to a character in real time. So a character might be generated through the CFG but then hand-adjusted afterwards, e.g. by swapping out that BlueShirt for a BlackShirt, or, if I go the Bézier curve route for facial features, by adjusting the eye shape for this character. This might be feasible by calling MakeHuman and Blender via their Python interfaces, rendering the model, and then displaying the rendered image, but that sounds really complicated and janky. I’ll have to think on it some more.